Follow Us:

Why Is Dynamic Programming Better Than Most Of the Algorithms?

In computer science, Dynamic Programming solves complex problems by breaking them down into smaller subproblems. It is based on the principle that it is easier to solve a smaller problem than a larger one. In today’s blog, we will deep dive into this topic.

In today’s blog, I’ll try to cover everything about DP.

Introduction to Dynamic Programming:

If you have ever tried to come up with an efficient algorithm to solve a problem, you have probably run into the issue of trying to figure out how to divide the problem into subproblems. Once you have divided the problem into subproblems, you need to find a way to combine the solutions to the subproblems into a solution to the original problem. This can be a difficult task, especially if the subproblems overlap.

Dynamic programming is a method for solving problems by breaking them down into smaller subproblems. It is a bottom-up approach that starts with the original problem and breaks it down into smaller subproblems. The solutions to the subproblems are then combined to solve the original problem.

The name “dynamic programming” comes from the problems being solved by breaking them down into smaller subproblems, which are then solved in a bottom-up fashion. This is, in contrast, to “divide and conquer” algorithms, which solve problems by dividing them into smaller subproblems and then solving them in a top-down fashion.

Dynamic programming algorithms are usually more efficient than divide and conquer algorithms, but they can be more difficult to design and implement.

Dynamic programming is usually used to solve optimization problems.

Optimization Problem:

An optimization problem is a problem in which we are trying to find the best solution from a set of possible solutions. In dynamic programming, we typically want to find the optimal solution, meaning the solution that is the best possible from all possible solutions.

To solve an optimization problem using dynamic programming, we first need to identify the subproblems. We then solve each subproblem and store the solutions in a table. Finally, we use the solutions to the subproblems to solve the original problem.

The key to dynamic programming is to carefully choose the subproblems to take advantage of the solutions to the subproblems. If we choose the subproblems wisely, we can often solve the original problem in much less time than it would take to solve it without using dynamic programming.

Dynamic programming is a powerful technique, but it is not always the best method to use. In some cases, it may be more efficient to solve a problem using a different technique, such as greedy algorithms or backtracking.

We’ll discuss it later, but before that, have you ever thought that,

Why is this algorithm called Dynamic Programming?

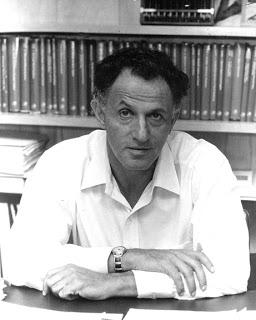

Image credit: Optlinealjur.blogspot.com

The term “dynamic programming” was first coined by Richard Bellman in the 1950s. Bellman was a mathematician looking for a way to optimize problems that could break down into smaller subproblems. He found that by breaking down a problem into smaller subproblems, he could then solve each subproblem independently and combine the solutions to the subproblems to solve the original problem.

Richard Bellman chose the word “dynamic” to describe his dynamic programming algorithm because it captures the essence of the algorithm: namely, it is a method for solving problems by breaking them down into smaller subproblems. The word dynamic also has a connotation of change and motion, appropriate for a constantly evolving algorithm as it solves a problem. Also, the name “dynamic” sounded impressive. So he named this Dynamic algorithm Programming.

How does dynamic programming work?

A dynamic programming algorithm is a powerful technique for solving problems that can be expressed as a recurrence. A recurrence is a mathematical equation that defines a function in terms of itself. For example, the Fibonacci sequence is defined by the recurrence F(n) = F(n-1) + F(n-2), where F(0) = 0 and F(1) = 1.

We can use dynamic programming to solve problems that are not expressible as a recurrence. But it is most powerful when used to solve problems that can be expressed as a recurrence.

The key to understanding dynamic programming is to realize that solving the entire problem at once is not necessary. We can solve the subproblems in any order. Once all subproblems have been solved, We can assemble the solution to the original problem.

Dynamic programming is a bottom-up approach to problem-solving. It begins by solving the simplest subproblem and then uses the solutions to the subproblems to solve the next-simplest subproblem, and so on until the original problem is solved.

The key to dynamic programming is to recognize when We can break down a problem into subproblems and store the solutions to the subproblems to reuse them.

Dynamic programming is most useful for problems with an inherent structure that it can exploit. For example, many optimization problems can be expressed as a graph, where the nodes represent states and the edges represent transitions between states.

We can use dynamic programming to find the shortest path through a graph. We can also use it to find the shortest path that visits all of the nodes in a graph.

The dynamic programming approach is to start at the beginning and work your way forward, keeping track of the best path at each stage. When you reach the end, you will have the best path from start to finish.

The advantage of dynamic programming is that it is guaranteed to find the best solution if one exists. The disadvantage is that it can be time-consuming and memory-intensive, especially for large problems.

When to use DP?

Dynamic programming is a versatile tool that can be used to solve a wide variety of problems. In general, dynamic programming can be used to solve problems that can be broken down into smaller subproblems. Additionally, dynamic programming can be used to solve problems that have an optimal substructure, meaning that the optimal solution to the overall problem can be found by solving the subproblems.

Dynamic programming is particularly well-suited for problems that involve making decisions. Many problems that involve making decisions can be represented as a decision tree. In a decision tree, each node represents a point at which a decision must be made, and the edges represent the possible outcomes of that decision.

Dynamic programming can be used to solve many other types of problems as well, such as the traveling salesman problem and the minimum cost flow problem. It is a powerful technique that can be used to find optimal solutions to a wide variety of problems.

Now, let’s talk about the advantages and disadvantages of this algorithm.

Advantages of DP:

DP algorithms are typically faster than naive algorithms, and We can use them to solve problems that are too difficult for traditional methods.

We can use DP to solve many different types of problems, including:

• Finding the shortest path between two points

• Optimizing a manufacturing process

• Planning the most efficient route for a delivery truck

• Scheduling tasks to minimize conflicts

• Deciding when to buy and sell stocks

And many more.

Disadvantages of DP:

Now let’s talk about the disadvantages of DP.

DP is usually applied to optimization problems. For example, a dynamic programming algorithm will examine all possible ways to solve a problem and choose the best solution. However, this can be time-consuming, and the algorithm may not always find the best solution.

Another disadvantage of dynamic programming is that the subproblems must be independent. This means that the solution to one subproblem must not depend on another subproblem. This can be a difficult constraint to satisfy.

DP can be a powerful tool for solving problems, but its limitations. Therefore, it is important to understand these limitations before using dynamic programming to solve a problem.

Okay, so these are all about DP. As we’ve discussed this ” DP ” algorithm, we’ve got a little bit of idea about the algorithm. So, now let’s compare it with other algorithms.

Let’s do it.

Comparing DP with other algorithms:

Wait, we’ve talked about DP, but we didn’t talk about the other algorithm that we will compare with DP. So, let’s know a little bit about those algorithms first.

1. Divide & Conquer:

Divide and conquer algorithms are a powerful tool for solving problems. By breaking a problem down into smaller pieces, we can more easily find a solution. These algorithms are especially useful for problems that are too difficult to solve directly. By breaking the problem into smaller pieces, we can more easily find a solution that works for all the pieces. Many famous examples of divide and conquer algorithms, such as the quicksort algorithm. This algorithm is used to sort a list of items. It works by choosing a pivot element and then partitioning the list around the pivot. The left side of the list contains all the elements less than the pivot, and the right side contains all the elements greater than the pivot. The quicksort algorithm then recursively sorts the left and right sides of the list.

2. Backtracking:

A backtracking algorithm is a method of solving a problem by trying to build a solution incrementally. One piece at a time while keeping track of all the choices made along the way so that it can backtrack and undo a choice if it leads to a dead end. The advantage of a backtracking algorithm is that it is guaranteed to find a solution if one exists, and it can do so relatively quickly. The disadvantage is that it can sometimes get caught in an infinite loop, trying the same unsuccessful choices repeatedly. Backtracking algorithms are often used in constraint satisfaction problems, such as Sudoku, crosswords, and other puzzles. We can also use them in general search problems, such as finding a path through a maze or a graph.

3. Greedy:

A greedy algorithm is an algorithm that follows the problem-solving heuristic of making the locally optimal choice at each stage with the hope of finding a global optimum. For example, a greedy algorithm for the traveling salesman problem would try to find the shortest path by always choosing the shortest edge from the current vertex. The greedy algorithms are used in optimization problems. When applying a greedy algorithm to an optimization problem, choices are made in a way that is optimized for the current problem without considering the effect of these choices on future problems. This can lead to the algorithm making sub-optimal choices, as future problems may be more difficult due to the choices made in the past. A classic example of a greedy algorithm is Kruskal’s algorithm for finding the minimum spanning tree of a graph. This algorithm starts with an empty graph and adds edges in order of increasing weight until a spanning tree is found. The edges are added so that, at each step, the resulting graph is always a tree (i.e., there are no cycles). The greedy algorithms are often used in conjunction with other techniques, such as dynamic programming to find the overall optimal solution to a problem. For instance, the greedy knapsack algorithm can be used to find an optimal solution to the 0-1 knapsack problem, but it is not guaranteed to find the global optimum. In this case, the algorithm would need to be combined with dynamic programming to find the overall best solution.

4. Recursion:

In computer science, recursion is a method of solving a problem by breaking it down into smaller and smaller subproblems. A recursive algorithm uses this approach. The most famous example of a recursive algorithm is the Tower of Hanoi, which can be used to solve a Towers of Hanoi puzzle. The algorithm moves the topmost disk from one peg to another and then moves the remaining disks from the other peg to the destination peg. We can use recursive algorithms to solve many different problems, including sorting, searching, and mathematical problems. They are particularly well suited for problems that can be divided into smaller subproblems similar to the original problem. The key to designing a recursive algorithm is identifying the base case, which is the simplest form of the problem that We can solve directly. For example, the base case for the Towers of Hanoi algorithm is when there is only one disk to move. Once the base case has been identified, the recursive algorithm can be designed to solve the problem by breaking it down into smaller subproblems until the base case is reached.

5. Memoization:

Memoization is an optimization technique used to speed up programs by storing the results of expensive function calls and returning the cached result when the same inputs occur again. In many cases, memoization can dramatically improve a program’s performance. For example, consider a program that must repeatedly calculate the Fibonacci numbers. Each Fibonacci number is the sum of the previous two, so the calculation can be quite expensive. However, if we memoize the results, we can avoid recalculating the numbers that have already been calculated. There are many different ways to memoize a function, but the basic idea is always to store the results of expensive function calls and return the cached result when the same inputs occur again. One common way to memoize a function is to use a hash table. We first check to see if the input is in the hash table when the function is called. If it is, we return the stored result. If not, we calculate the result and store it in the hash table before returning it. Another common way to memoize a function is to use a cache. A cache is a data structure designed to hold frequently used data. We first check to see if the input is in the cache when the function is called. If it is, we return the stored result. If not, we calculate the result and add it to the cache before returning it. Memoization is a powerful optimization technique, but it is not always the best choice. In some cases, memoization can make a program slower. For example, if a function is called with different inputs many times, the overhead of storing and retrieving the results from a hash table or cache can be significant. Therefore, it is important to choose an appropriate optimization technique for the problem at hand. Memoization is a valuable tool that We can use to speed up programs in many cases, but it is not always the best choice.

Okay, so now we’re good to go.

1. DP vs. Divide and Conquer:

| Dynamic Programming | Divide and Conquer |

|---|---|

| Dynamic programming solves the problem by starting from the simplest subproblem and then gradually building up to the original problem. This approach works well for problems where We can use the solution to a subproblem to solve a larger problem. However, it can be difficult to identify the order in which We should solve the subproblems, and sometimes the subproblem solutions can become too complex. | Divide and conquer divides the problem into smaller subproblems, solves each subproblem, and then combines the solutions to the subproblems to solve the original problem. This approach works well for problems that can be divided into independent subproblems. However, it can be difficult to identify these subproblems, and sometimes the subproblems can end up being just as difficult to solve as the original problem. |

| To be solved using Dynamic Programming, a problem need to be an optimized problem. This means it should be divided into subproblems that are dependent on each other. | To be solved using Divide and Conquer a problem need not be an optimized one. If the problem can be presented into smaller independent subproblems, then the Divide and Conquer method will be helpful. |

| With dynamic programming, the subproblems are solved in a bottom-up manner. That is, the smaller subproblems are solved first and the solutions are then used to solve the larger subproblems. | With divide and conquer, the subproblems are solved in a top-down manner. That is, the larger subproblems are solved first and the solutions are then used to solve the smaller subproblems. |

So, which algorithm is better? It depends on the problem. Dynamic programming is better when the subproblems overlap and divide and conquer is better when the subproblems do not overlap.

In general, dynamic programming is more difficult to implement than divide and conquer. However, it is usually more efficient. This is because, with dynamic programming, the subproblems are solved only once. With divide and conquer, the subproblems may be solved multiple times.

2. DP vs. Backtracking:

Dynamic programming and backtracking are both similar in that they allow you to explore all possible solutions to a problem and choose the best one. However, they differ in how they approach the problem.

Dynamic programming is a bottom-up approach to problem-solving. It starts with the simplest possible solution and then finds the best way to improve it. On the other hand, backtracking is a top-down approach. It starts with the most general solution and then finds the best way to specialize it.

We can use both dynamic programming and backtracking to solve the same problems. However, dynamic programming is usually faster and more efficient. It is also easier to code and debug.

If you are trying to decide which approach to use for a problem, it is generally best to try dynamic programming first. If that doesn’t work, then you can try backtracking.

3. DP vs. Greedy:

Dynamic programming is usually used for optimization problems. An optimization problem is where we want to find the best solution from a set of possible solutions. For example, we might want to find the shortest path from one city to another.

The key to dynamic programming is to realize that we can solve the smaller subproblems first and then use those solutions to solve the larger problem. This is usually done by creating a table or array of solutions to the subproblems.

The most famous example of dynamic programming is the Fibonacci sequence. To find the nth Fibonacci number, we first find the n-1th and n-2th Fibonacci numbers and then add them together.

The greedy algorithm is a technique for solving problems where we make the locally optimal choice at each step to find the global optimum. For example, consider the problem of finding the shortest path from one city to another.

A greedy algorithm would always choose the shortest path at each step without considering the effects of its choices on future steps. So, it might choose a shorter path in the short term but much longer in the long term.

The greedy algorithm is often used in optimization problems, but it is not guaranteed to find the global optimum. As a result, it is often not very effective.

The main difference between dynamic programming and the greedy algorithm is that dynamic programming is a bottom-up approach while the greedy algorithm is a top-down approach. We break the problem down into smaller subproblems and solve them first with dynamic programming. With the greedy algorithm, we make the locally optimal choice at each step without considering the global picture.

4. DP vs. Recursion:

Dynamic Programming (DP) is a technique for solving problems that exhibit the characteristics of overlapping sub-problems and optimal substructure. In other words, We can decompose DP problems into smaller sub-problems which can be further decomposed into even smaller sub-problems. Each sub-problem is solved only once, and the solutions to the sub-problems are stored in a table so that We can reuse them (hence the name “dynamic programming”).

Recursion is a programming technique that allows a function to call itself. A function that calls itself is said to be “recursive”. Recursion is used to solve problems that We can decompose into smaller sub-problems of the same type.

The main difference between DP and recursion is that DP uses a bottom-up approach to solving problems while recursion uses a top-down approach. In DP, the smaller sub-problems are solved first, and the solutions are stored in a table. The solutions to the larger sub-problems are then computed from the solutions to the smaller sub-problems. On the other hand, in recursion, the larger sub-problems are solved first, and the solutions are then computed for the smaller sub-problems.

Another difference between DP and recursion is that DP problems can have more than one solution, while recursion problems can have only one solution. This is because We can decompose DP problems into smaller sub-problems which can be further decomposed into even smaller sub-problems. Each sub-problem can be solved in more than one way, and We can combine the solutions to the sub-problems to give more than one solution to the original problem. On the other hand, a recursion is a top-down approach, and the solutions to the sub-problems are computed one at a time. Therefore, recursion problems can have only one solution.

Finally, DP is usually faster than recursion. DP uses a bottom-up approach, and the solutions to the smaller sub-problems are stored in a table. We can then compute the solutions to the larger sub-problems from the solutions to the smaller sub-problems. On the other hand, recursion is a top-down approach, and the solutions to the sub-problems are computed one at a time. Therefore, recursion is usually slower than DP.

Now, the last one-

5. DP vs. Memoization:

Dynamic programming is a technique for solving problems by breaking them down into smaller subproblems. Memoization is a technique for storing the results of expensive function calls and reusing them when the same inputs occur again.

Both dynamic programming and memoization are powerful tools for solving complex problems. However, there are some key differences between the two techniques.

Dynamic programming is a bottom-up approach to problem-solving. It starts with the smallest subproblem and works its way up to the original problem. Memoization is a top-down approach. It starts with the original problem and breaks it down into smaller subproblems.

Dynamic programming is typically used for problems that have an inherent order. That is, We must solve the subproblems in a specific order. However, memoization can be used for any problem, including those without an inherent order.

Dynamic programming usually results in a single final solution. However, memoization can result in multiple solutions, depending on how the memoized values are used.

Dynamic programming is typically used for problems that We can break down into smaller subproblems. On the other hand, memoization is often used for problems that cannot be easily divided into smaller subproblems.

Dynamic programming is a powerful technique for solving complex problems. However, it has some limitations. It only works for problems that can be divided into smaller subproblems. Additionally, it can be time-consuming to find the optimal solution. Memoization is also a powerful technique, but it has different limitations. We can only use it for problems that have already been solved. Additionally, memoization can speed up the execution of a program, but We can’t use it to find the optimal solution.

So, that’s all for this blog, and I hope this blog was not bored you and provided some valuable information about Dynamic Programming for you. If you found this blog useful, then share this blog with your friends too.

See you in the next blog.