A CSS parser is a fundamental tool in web development, enabling developers to analyze, manipulate, and even transform CSS styles dynamically. But how does a CSS parser work under the hood? More importantly, how can you build one from scratch using JavaScript?

Understanding CSS parsing is essential for tasks like building custom style preprocessors, enforcing coding standards, and optimizing stylesheets for performance. While modern browsers have built-in CSS parsers, creating one yourself provides deeper insights into how CSS is structured and processed.

This guide will walk you through the process of building a CSS parser in JavaScript, starting from breaking down raw CSS into tokens, structuring it into an abstract syntax tree (AST), and finally making it usable for transformations or analysis. Whether you’re a developer aiming to customize CSS processing or just curious about parsing techniques, by the end of this guide, you’ll have a working CSS parser and a solid understanding of how CSS is interpreted programmatically.

Before we dive in, ask yourself: What happens when you load a CSS file into a browser? How does the browser read and apply styles? This knowledge will be useful as we step into lexing, parsing, and syntax trees.

Understanding CSS Parsing

Writing CSS is one thing; understanding how it is processed is another. When you add styles to a webpage, the browser doesn’t just read the CSS and apply it directly—it interprets it, breaking it down into structured components before rendering the final design. This process, known as CSS parsing, plays a crucial role in ensuring that styles are applied correctly and efficiently. But what does CSS parsing actually involve? And how do browsers handle it under the hood? Let’s break it down.

What is CSS Parsing?

CSS parsing is the process of analyzing and converting raw CSS code into a structured format that a browser can understand. Think of it like translating a set of instructions written in a language into a format that a machine can process. The parser reads the CSS, identifies key components such as selectors, properties, and values, and structures them in a way that allows the browser to render styles accurately.

However, CSS parsing isn’t just about reading styles; it also involves validation and error handling. Unlike JavaScript, which stops execution when it encounters an error, CSS parsers are designed to be fault-tolerant. This means that even if a stylesheet contains invalid rules, the browser ignores them and continues parsing the rest of the file.

How do Browsers Parse CSS?

Every time you load a webpage, the browser goes through a well-defined process to interpret and apply CSS. This happens in multiple steps, each playing a critical role in rendering styles correctly.

- Tokenization (Lexical Analysis)

The first step in CSS parsing is tokenization, where the raw CSS code is broken down into smaller, meaningful components called tokens. These tokens represent selectors, properties, values, and other syntax elements. For example, when tokenizing the rule `body { background-color: #f5f5f5; }`, the tokenizer identifies `body` (selector), `{` (block start), `background-color` (property), `:` (colon), `#f5f5f5` (value), `;` (semicolon), and `}` (block end). Strictly speaking, a browser's tokenizer emits generic tokens such as identifiers, hash tokens, and colons, and the parser decides which identifier is a selector and which is a property; the tokenizer we build later blends these two steps for simplicity.

- Parsing into an Abstract Syntax Tree (AST)

Once the CSS is tokenized, the parser structures these tokens into a tree: a hierarchical representation of CSS rules, where each node represents a different part of the stylesheet. The tree captures which declarations belong to which rule and how rules nest inside blocks such as media queries.

- Building the CSS Object Model (CSSOM)

The parsed rules are then turned into the CSS Object Model (CSSOM), an in-memory representation of all styles. Unlike raw CSS text, the CSSOM is structured so that the browser can efficiently calculate which styles apply to which elements.

- Applying Styles and Constructing the Render Tree

The browser combines the CSSOM with the DOM (Document Object Model) to create the render tree, a structure that represents all visible elements on the page along with their computed styles.

- Layout Calculation and Painting

Finally, the browser determines the position and dimensions of each element (layout) before painting the final visual representation onto the screen. Changes to CSS at runtime (e.g., via JavaScript) can trigger these calculations again, which can impact performance.

Challenges in CSS Parsing

While the parsing process seems straightforward, it comes with its own set of challenges:

- Handling Invalid CSS

Since CSS parsers are fault-tolerant, they must decide how to handle incorrect syntax. Unlike JavaScript, where an error can break execution, CSS simply skips invalid declarations or rules, which can sometimes lead to unintended styles.

- Selector Specificity and Cascade Rules

Browsers must resolve conflicts when multiple CSS rules apply to the same element. Specificity, importance (`!important`), and the cascade determine which styles take precedence. Strictly speaking, this happens after parsing, during style calculation, but it depends on the parser preserving selectors and declarations accurately.

- Performance Considerations

Large stylesheets and complex selectors can slow down rendering. The browser must build the CSSOM and match selectors efficiently to avoid performance bottlenecks.

- Dynamic CSS Modifications

When JavaScript modifies styles dynamically, the browser may need to parse the new CSS text, update the CSSOM, recalculate styles, and sometimes trigger a reflow and repaint. This is why poorly optimized dynamic styling can degrade page performance.

Why Writing Good CSS Matters

Parsing is at the heart of how CSS works. A well-structured, optimized stylesheet not only ensures a visually appealing design but also improves performance. Bloated or inefficient CSS can slow down page rendering, affecting both user experience and SEO rankings.

This is why writing CSS with clarity and efficiency is essential. It’s not just about making things look good; it’s about ensuring styles are applied smoothly without unnecessary delays. If you want to dive deeper into how CSS parsing works and why it’s important, check out this detailed breakdown: CSS Parsing Explained.

Setting Up the Development Environment

Before we start building a CSS parser, we need to set up a structured development environment. Writing a parser from scratch requires a clean workspace with the right tools, ensuring efficiency and maintainability. In this section, we will go through the necessary tools, project setup, and dependencies required for the parser.

Choosing the Right Tools

Building a CSS parser in JavaScript doesn’t require an extensive setup, but choosing the right tools can make the process smoother. Here’s what we need:

- Node.js and npm: Since we are writing the parser in JavaScript, Node.js will allow us to execute the code outside the browser. The Node Package Manager (npm) will help in managing dependencies.

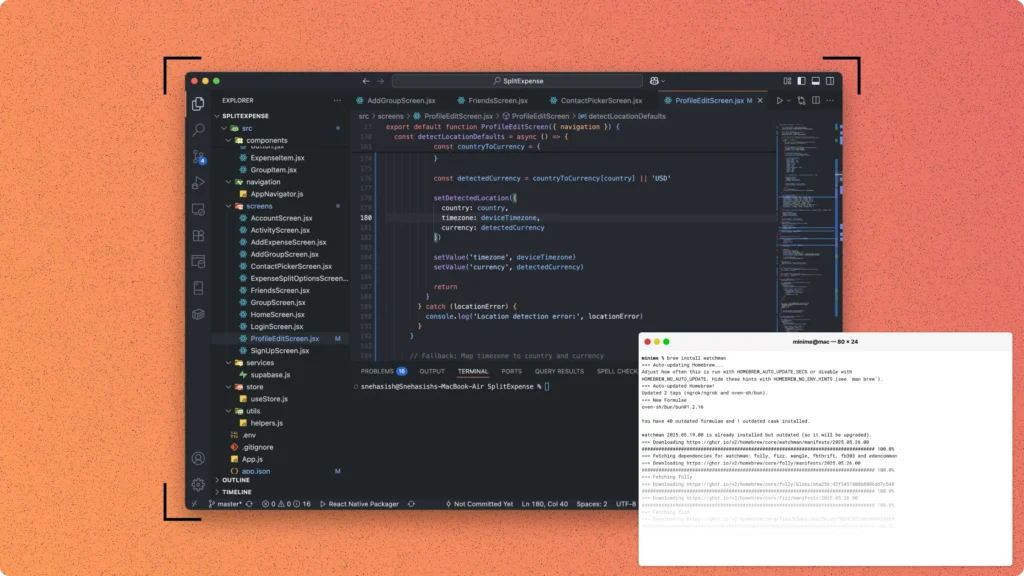

- A Code Editor: A lightweight, feature-rich editor like VS Code is ideal. It provides syntax highlighting, debugging support, and terminal integration.

- Git for Version Control: Using Git ensures we can track changes and revert to previous states if needed. It also makes collaboration easier.

- Testing Framework: Testing is a critical part of parser development. We can use Jest or Mocha to validate different parsing scenarios.

Installing Node.js and Setting Up a Project

If Node.js is not installed, download it from nodejs.org. Once installed, verify it by running:

```shell
node -v
npm -v
```

Next, we will initialize a new project:

```shell
mkdir css-parser
cd css-parser
npm init -y
```

These commands create a new directory, navigate into it, and initialize a package.json file. The -y flag accepts the default settings, but you can edit the file later to customize project details.

Installing Essential Dependencies

Now, let’s install the dependencies required for our parser:

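```shell
npm install jest --save-dev
```

This installs Jest as a development dependency, which will be used for writing and running test cases. If you prefer Mocha, install it (together with the Chai assertion library) instead. Note that the tests in this guide use Jest's test() and expect() API, so they would need small changes to run under Mocha and Chai:

```shell
npm install mocha chai --save-dev
```

Optionally, you can also add a utility library like lodash for working with objects and arrays; the code in this guide doesn't depend on it:

```shell
npm install lodash
```

Setting Up a File Structure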

To keep the project organized, let’s create a structured file layout:

```text
css-parser/
├── src/
│   ├── tokens.js
│   ├── lexer.js
│   ├── parser.js
│   └── cssom.js
├── tests/
│   ├── lexer.test.js
│   └── parser.test.js
├── package.json
└── README.md
```

- src/ contains the core modules:
  - tokens.js: Defines the token types shared by the lexer and parser.
  - lexer.js: Breaks down CSS into tokens.
  - parser.js: Converts tokens into a structured format.
  - cssom.js: Builds the CSS Object Model representation.
- tests/ houses unit tests to ensure correctness.
- package.json manages dependencies and scripts.
- README.md provides documentation.

Configuring the Testing Framework

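For Jest, add the following script in package.json:

```json
"scripts": {
  "test": "jest"
}
```

For Mocha, modify it as follows (Mocha looks in a test/ folder by default, so we point it at tests/):

```json
"scripts": {
  "test": "mocha tests"
}
```

Now, we can run tests using:

```shell
npm test
```

Writing a Simple Test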

Before diving into the parser, let’s write a basic test in tests/lexer.test.js to ensure everything is working:

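```javascript
// lexer.js exports the tokenize function directly (module.exports = tokenize)
const tokenize = require('../src/lexer');

test('Tokenizes simple CSS rule', () => {
  const input = 'body { color: black; }';
  const tokens = tokenize(input);
  expect(tokens.length).toBeGreaterThan(0);
});
```

Running npm test now confirms that Jest finds the test file. The test itself will fail with a "Cannot find module" error until src/lexer.js exists; it should pass once we implement the lexer in the next section.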

With the development environment ready, we now have a structured workspace to begin writing the lexer and parser. Next, we will implement the lexer, the first step in breaking down CSS code into tokens.

Building the Lexer (Tokenizer)

A CSS parser starts with breaking down raw CSS code into smaller, manageable pieces. This is the job of the lexer, also known as the tokenizer. The lexer reads CSS code character by character and converts it into structured components called tokens, which are then used by the parser to understand the CSS structure. Without a lexer, a parser would have to work with raw text, making the process inefficient and difficult to manage.

But how does a lexer work? Let’s walk through the process of building one in JavaScript.

What is a Lexer?

A lexer is a program that scans a text input and groups sequences of characters into meaningful tokens. In the case of CSS, these tokens include:

- Selectors (e.g., `body`, `.container`)
- Braces (`{`, `}`)
- Properties (e.g., `color`, `font-size`)
- Values (e.g., `black`, `16px`)
- Colons and semicolons (`:`, `;`)
- Comments (`/* this is a comment */`)

Each of these tokens represents a building block of CSS, allowing the parser to make sense of the code structure.

Breaking Down CSS into Tokens

Before writing any code, let’s visualize how a lexer processes a CSS rule:

```css
body {
  color: black;
  font-size: 16px;
}
```

The lexer should break this down into the following tokens:

| Token Type | Value |
|---|---|
| Selector | body |
| Left Brace | { |
| Property | color |
| Colon | : |
| Value | black |
| Semicolon | ; |
| Property | font-size |
| Colon | : |
| Value | 16px |
| Semicolon | ; |
| Right Brace | } |

This structured representation makes it easier for the parser to build a CSS object model later.

Implementing the Lexer in JavaScript

Now, let’s build the lexer step by step.

Step 1: Defining Token Types

First, define all the token types that our lexer should recognize. Create a new file called src/tokens.js:

```javascript
const TOKEN_TYPES = {
  SELECTOR: 'SELECTOR',
  LEFT_BRACE: 'LEFT_BRACE',
  RIGHT_BRACE: 'RIGHT_BRACE',
  PROPERTY: 'PROPERTY',
  COLON: 'COLON',
  VALUE: 'VALUE',
  SEMICOLON: 'SEMICOLON',
  COMMENT: 'COMMENT',
  WHITESPACE: 'WHITESPACE'
};

module.exports = TOKEN_TYPES;
```

Step 2: Writing the Lexer Function

Now, create src/lexer.js and start implementing the lexer function.

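```javascript
const TOKEN_TYPES = require('./tokens');

// Names like "body", "color", and "black" look identical character by character,
// so the lexer uses context: text outside braces is a selector, text after a ":"
// is a value, and inside a block a name followed by "{" starts a nested rule.

// Characters allowed in a selector: names, ., #, :, combinators, attribute brackets, commas, spaces
const SELECTOR_CHAR = /[\w\s.#:>+~*,\[\]="'()-]/;

// Returns true if a "{" appears before the next ";" or "}",
// i.e. the text at `from` starts a (nested) rule rather than a declaration
function startsRule(input, from) {
  for (let i = from; i < input.length; i++) {
    if (input[i] === '{') return true;
    if (input[i] === ';' || input[i] === '}') return false;
  }
  return false;
}

function tokenize(input) {
  const tokens = [];
  let current = 0;
  let depth = 0;       // Number of currently open { blocks
  let inValue = false; // True between a ":" and the next ";" or "}"

  while (current < input.length) {
    const char = input[current];

    // Ignore whitespace
    if (/\s/.test(char)) {
      current++;
      continue;
    }

    // Handle comments (/* ... */)
    if (char === '/' && input[current + 1] === '*') {
      const end = input.indexOf('*/', current + 2);
      if (end === -1) throw new Error('Unterminated comment');
      tokens.push({ type: TOKEN_TYPES.COMMENT, value: input.slice(current + 2, end) });
      current = end + 2; // Skip closing */
      continue;
    }

    // Handle braces ({ and }) and semicolons (;)
    if (char === '{') {
      tokens.push({ type: TOKEN_TYPES.LEFT_BRACE, value: char });
      depth++;
      current++;
      continue;
    }
    if (char === '}') {
      tokens.push({ type: TOKEN_TYPES.RIGHT_BRACE, value: char });
      depth--;
      inValue = false;
      current++;
      continue;
    }
    if (char === ';') {
      tokens.push({ type: TOKEN_TYPES.SEMICOLON, value: char });
      inValue = false;
      current++;
      continue;
    }

    // Handle values: everything after ":" up to ";" or "}" (e.g. black, 16px, #f5f5f5, 1px solid red)
    if (inValue) {
      let value = '';
      while (current < input.length && input[current] !== ';' && input[current] !== '}') {
        value += input[current++];
      }
      tokens.push({ type: TOKEN_TYPES.VALUE, value: value.trim() });
      continue;
    }

    // Handle colons (:) that separate a property from its value
    if (char === ':' && depth > 0 && !startsRule(input, current)) {
      tokens.push({ type: TOKEN_TYPES.COLON, value: char });
      inValue = true;
      current++;
      continue;
    }

    // Handle selectors (e.g. body, .container, a:hover) at the top level,
    // or inside a block when a "{" follows (a nested rule)
    if ((depth === 0 || startsRule(input, current)) && SELECTOR_CHAR.test(char)) {
      let selector = '';
      // The bounds check matters: /regex/.test(undefined) tests the string "undefined"
      while (current < input.length && input[current] !== '{' && SELECTOR_CHAR.test(input[current])) {
        selector += input[current++];
      }
      tokens.push({ type: TOKEN_TYPES.SELECTOR, value: selector.trim() });
      continue;
    }

    // Handle property names (e.g. color, font-size)
    if (/[a-zA-Z-]/.test(char)) {
      let property = '';
      while (current < input.length && /[a-zA-Z0-9-]/.test(input[current])) {
        property += input[current++];
      }
      tokens.push({ type: TOKEN_TYPES.PROPERTY, value: property });
      continue;
    }

    throw new Error(`Unexpected character: ${char}`);
  }

  return tokens;
}

module.exports = tokenize;
```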

Step 3: Testing the Lexer

To ensure the lexer works correctly, let’s write a test in tests/lexer.test.js:

```javascript
const tokenize = require('../src/lexer');
const TOKEN_TYPES = require('../src/tokens');

test('Tokenizes a basic CSS rule', () => {
  const input = 'body { color: black; }';
  const expectedTokens = [
    { type: TOKEN_TYPES.SELECTOR, value: 'body' },
    { type: TOKEN_TYPES.LEFT_BRACE, value: '{' },
    { type: TOKEN_TYPES.PROPERTY, value: 'color' },
    { type: TOKEN_TYPES.COLON, value: ':' },
    { type: TOKEN_TYPES.VALUE, value: 'black' },
    { type: TOKEN_TYPES.SEMICOLON, value: ';' },
    { type: TOKEN_TYPES.RIGHT_BRACE, value: '}' }
  ];

  expect(tokenize(input)).toEqual(expectedTokens);
});
```

Run the test with:

```shell
npm test
```

If everything is implemented correctly, the test should pass.

Now that we have a working lexer, we can move on to developing the parser, which will take these tokens and convert them into a structured representation.

Managing Nested Rules and Media Queries

Now that we have a working parser, we need to enhance its capabilities to handle nested rules and media queries. Nesting is common in real stylesheets: preprocessors like SCSS have long supported it, and modern browsers now support native CSS nesting as well. Media queries, meanwhile, are essential for making styles respond to device characteristics such as screen width.

If our parser only handles simple CSS rules, it won’t be able to process styles like these:

```css
.container {
  color: black;

  .child {
    color: blue;
  }

  @media (max-width: 600px) {
    color: gray;
  }
}
```

Without proper handling, these nested rules will break our parser. Let’s extend our implementation to support them.

Understanding Nested Rules and Media Queries

What are Nested Rules?

Nested rules allow child selectors to be defined inside their parent rule. Preprocessors like SCSS have supported this syntax for years and compile nested rules into flat CSS; native CSS nesting is a more recent addition that current versions of the major browsers also support.

For example, the following SCSS:

```scss
.container {
  color: black;

  .child {
    color: blue;
  }
}
```

Compiles into:

```css
.container {
  color: black;
}

.container .child {
  color: blue;
}
```

Our parser should recognize nested structures and represent them correctly in the Abstract Syntax Tree (AST).

What are Media Queries?

Media queries allow conditional styling based on screen size, device type, or other properties.

Example:

```css
@media (max-width: 600px) {
  body {
    background-color: gray;
  }
}
```

This means:

- When the screen width is 600px or less, the background color of `<body>` should be gray.
- Otherwise, the default styles apply.

Since media queries contain multiple CSS rules, our parser needs to group them properly.

Enhancing the Parser to Handle Nested Rules and Media Queries

We now need to modify our parser to correctly process nested structures. Let’s break this down step by step.

Updating the AST Structure

We need to adjust the Abstract Syntax Tree (AST) to accommodate nested rules and media queries.

For example, given the CSS:

```css
.container {
  color: black;

  .child {
    color: blue;
  }

  @media (max-width: 600px) {
    color: gray;
  }
}
```

Our AST should look like this:

```json
{
  "type": "Stylesheet",
  "rules": [
    {
      "type": "Rule",
      "selector": ".container",
      "declarations": [
        { "property": "color", "value": "black" }
      ],
      "children": [
        {
          "type": "Rule",
          "selector": ".child",
          "declarations": [
            { "property": "color", "value": "blue" }
          ],
          "children": []
        },
        {
          "type": "MediaQuery",
          "condition": "(max-width: 600px)",
          "rules": [
            {
              "type": "Rule",
              "selector": ".container",
              "declarations": [
                { "property": "color", "value": "gray" }
              ],
              "children": []
            }
          ]
        }
      ]
    }
  ]
}
```

Note that the bare declaration inside the nested @media block is attached to a rule that reuses the parent selector, .container.

Updating the Parser Logic

Now, we update parser.js to handle nested rules and media queries.

Modify parseRule() inside src/parser.js:

```javascript
// `current` is the shared token index used by all parser functions,
// and parseDeclaration() is the declaration parser from our basic parser.
function parseRule(tokens) {
  const selectorToken = tokens[current];
  if (selectorToken.type !== 'SELECTOR') {
    throw new Error(`Expected selector but found ${selectorToken.type}`);
  }

  const rule = {
    type: 'Rule',
    selector: selectorToken.value,
    declarations: [],
    children: []
  };
  current++; // Move past selector

  if (tokens[current].type !== 'LEFT_BRACE') {
    throw new Error('Expected "{" after selector');
  }
  current++; // Move past {

  while (tokens[current].type !== 'RIGHT_BRACE') {
    if (tokens[current].type === 'SELECTOR') {
      rule.children.push(parseRule(tokens)); // Handle nested rule
    } else if (tokens[current].type === 'MEDIA') {
      // Pass our selector along so bare declarations inside @media apply to this rule
      rule.children.push(parseMediaQuery(tokens, rule.selector));
    } else {
      rule.declarations.push(parseDeclaration(tokens));
    }
  }
  current++; // Move past }

  return rule;
}
```

Adding Media Query Support

Now, let’s write the parseMediaQuery() function:

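```javascript
// parentSelector is set when the @media block is nested inside a rule
function parseMediaQuery(tokens, parentSelector = null) {
  const mediaToken = tokens[current];
  if (mediaToken.type !== 'MEDIA') {
    throw new Error(`Expected "@media" but found ${mediaToken.type}`);
  }
  current++; // Move past @media

  const mediaQuery = {
    type: 'MediaQuery',
    condition: tokens[current].value, // Media condition, e.g. "(max-width: 600px)"
    rules: []
  };
  current++; // Move past media condition

  if (tokens[current].type !== 'LEFT_BRACE') {
    throw new Error('Expected "{" after media query condition');
  }
  current++; // Move past {

  // Bare declarations in a nested @media are grouped under the parent selector
  let parentRule = null;

  while (tokens[current].type !== 'RIGHT_BRACE') {
    if (tokens[current].type === 'PROPERTY' && parentSelector) {
      if (!parentRule) {
        parentRule = { type: 'Rule', selector: parentSelector, declarations: [], children: [] };
        mediaQuery.rules.push(parentRule);
      }
      parentRule.declarations.push(parseDeclaration(tokens));
    } else {
      mediaQuery.rules.push(parseRule(tokens));
    }
  }
  current++; // Move past }

  return mediaQuery;
}
```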
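The parser functions above expect the lexer to emit a MEDIA token followed by a token holding the condition, but the lexer we built earlier throws on `@`. One way to close that gap is a small helper the lexer can call when it reaches `@media`. This is a sketch under assumptions: the helper name readMediaPrelude and the MEDIA_CONDITION token type are hypothetical (add both to src/tokens.js if you adopt them), and other at-rules such as @import or @font-face are not handled.

```javascript
// Hypothetical lexer helper: reads "@media <condition>" starting at `start` and
// returns the MEDIA and MEDIA_CONDITION tokens plus the index of the opening "{"
function readMediaPrelude(input, start) {
  const keyword = '@media';
  if (!input.startsWith(keyword, start)) {
    throw new Error(`Expected "${keyword}" at index ${start}`);
  }

  const braceIndex = input.indexOf('{', start);
  if (braceIndex === -1) {
    throw new Error('Expected "{" after media query condition');
  }

  // Everything between "@media" and "{" is the condition, e.g. "(max-width: 600px)"
  const condition = input.slice(start + keyword.length, braceIndex).trim();

  return {
    tokens: [
      { type: 'MEDIA', value: keyword },
      { type: 'MEDIA_CONDITION', value: condition }
    ],
    next: braceIndex // The lexer's normal "{" handling continues from here
  };
}
```

Inside tokenize(), you could check `input.startsWith('@media', current)` before the selector and property branches, push the returned tokens, and set `current` to `next` so the existing `{` handling (and brace-depth tracking) takes over. Skipping straight to the `{` also keeps the colon inside `(max-width: 600px)` from being lexed as a declaration separator. If your lexer tracks line numbers, count any newlines between `current` and `next` as well.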

Writing Tests for Nested Rules and Media Queries

Modify tests/parser.test.js to include test cases for nested rules and media queries:

```javascript
const parse = require('../src/parser');
const tokenize = require('../src/lexer');

test('Parses nested rules correctly', () => {
  const input = `
    .container {
      color: black;
      .child {
        color: blue;
      }
    }
  `;
  const tokens = tokenize(input);
  const ast = parse(tokens);

  expect(ast).toEqual({
    type: 'Stylesheet',
    rules: [
      {
        type: 'Rule',
        selector: '.container',
        declarations: [{ property: 'color', value: 'black' }],
        children: [
          {
            type: 'Rule',
            selector: '.child',
            declarations: [{ property: 'color', value: 'blue' }],
            children: [] // parseRule() always creates a children array
          }
        ]
      }
    ]
  });
});

// Requires a lexer that emits MEDIA tokens for @media
test('Parses media queries correctly', () => {
  const input = `
    @media (max-width: 600px) {
      body {
        background-color: gray;
      }
    }
  `;
  const tokens = tokenize(input);
  const ast = parse(tokens);

  expect(ast).toEqual({
    type: 'Stylesheet',
    rules: [
      {
        type: 'MediaQuery',
        condition: '(max-width: 600px)',
        rules: [
          {
            type: 'Rule',
            selector: 'body',
            declarations: [{ property: 'background-color', value: 'gray' }],
            children: []
          }
        ]
      }
    ]
  });
});
```

Run the tests:

```shell
npm test
```

If everything is implemented correctly, the tests should pass.

Handling nested rules and media queries is essential for a fully functional CSS parser. Without these features, we couldn't correctly parse responsive stylesheets or nested, preprocessor-style source, and later steps such as transformations, optimizations, and debugging tools all depend on an accurate tree.

Now our parser can handle nested CSS and media queries. The next step is error handling and validation, ensuring that malformed CSS is properly detected and reported.

Error Handling and Validation

A parser must not only interpret correctly written CSS but also handle errors gracefully. Unlike JavaScript, where a syntax error can stop execution entirely, CSS is designed to be fault-tolerant. If the browser encounters an invalid CSS rule, it simply ignores it and continues parsing the rest.

However, when building a custom CSS parser, we must explicitly detect errors and provide meaningful feedback. Without proper error handling, our parser could misinterpret rules, generate incorrect output, or even crash when processing malformed CSS.

Understanding CSS Error Handling

Errors in CSS usually fall into three categories:

- Syntax Errors: Missing braces, colons, semicolons, or incorrect rule structures.

- Unknown Properties or Values: Using invalid CSS properties or values that don’t exist.

- Incomplete Rules: Rules that are started but not properly closed.

Consider this incorrect CSS:

```css
body {
  color black
  font-size: 16px;
}
```

The parser should detect the missing colon (`:`) in `color black`, report it with its line number, and ideally recover and continue parsing the rest of the file, as a browser would.

Enhancing the Parser with Error Handling

To add error handling and validation, we need to update the parser functions to:

- Detect missing or misplaced syntax elements (e.g., a missing `:` in a declaration).
- Track line numbers and positions for detailed error messages.
- Recover from errors by skipping problematic tokens and continuing parsing.

Let’s update our parser to handle these cases.

Step 1: Tracking Line Numbers

Errors should include line numbers to help developers locate issues. Modify lexer.js to keep track of line numbers while tokenizing:

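```javascript
const TOKEN_TYPES = require('./tokens');

// Characters allowed in a selector: names, ., #, :, combinators, attribute brackets, commas, spaces
const SELECTOR_CHAR = /[\w\s.#:>+~*,\[\]="'()-]/;

// Returns true if a "{" appears before the next ";" or "}" (the text at `from` starts a rule)
function startsRule(input, from) {
  for (let i = from; i < input.length; i++) {
    if (input[i] === '{') return true;
    if (input[i] === ';' || input[i] === '}') return false;
  }
  return false;
}

function tokenize(input) {
  const tokens = [];
  let current = 0;
  let line = 1;
  let depth = 0;       // Number of currently open { blocks
  let inValue = false; // True between a ":" and the next ";" or "}"

  // Consume one character, counting newlines wherever they appear (values, comments, ...)
  function advance() {
    if (input[current] === '\n') line++;
    current++;
  }

  while (current < input.length) {
    const char = input[current];
    const startLine = line; // Line on which the next token starts

    if (/\s/.test(char)) {
      advance();
      continue;
    }

    if (char === '/' && input[current + 1] === '*') {
      const end = input.indexOf('*/', current + 2);
      if (end === -1) throw new Error(`Unterminated comment at line ${line}`);
      const value = input.slice(current + 2, end);
      while (current < end + 2) advance();
      tokens.push({ type: TOKEN_TYPES.COMMENT, value, line: startLine });
      continue;
    }

    if (char === '{') {
      tokens.push({ type: TOKEN_TYPES.LEFT_BRACE, value: char, line });
      depth++;
      advance();
      continue;
    }

    if (char === '}' || char === ';') {
      const type = char === '}' ? TOKEN_TYPES.RIGHT_BRACE : TOKEN_TYPES.SEMICOLON;
      tokens.push({ type, value: char, line });
      if (char === '}') depth--;
      inValue = false;
      advance();
      continue;
    }

    if (inValue) {
      let value = '';
      while (current < input.length && input[current] !== ';' && input[current] !== '}') {
        value += input[current];
        advance();
      }
      tokens.push({ type: TOKEN_TYPES.VALUE, value: value.trim(), line: startLine });
      continue;
    }

    if (char === ':' && depth > 0 && !startsRule(input, current)) {
      tokens.push({ type: TOKEN_TYPES.COLON, value: char, line });
      inValue = true;
      advance();
      continue;
    }

    if ((depth === 0 || startsRule(input, current)) && SELECTOR_CHAR.test(char)) {
      let value = '';
      while (current < input.length && input[current] !== '{' && SELECTOR_CHAR.test(input[current])) {
        value += input[current];
        advance();
      }
      tokens.push({ type: TOKEN_TYPES.SELECTOR, value: value.trim(), line: startLine });
      continue;
    }

    if (/[a-zA-Z-]/.test(char)) {
      let value = '';
      while (current < input.length && /[a-zA-Z0-9-]/.test(input[current])) {
        value += input[current];
        advance();
      }
      tokens.push({ type: TOKEN_TYPES.PROPERTY, value, line: startLine });
      continue;
    }

    throw new Error(`Unexpected character "${char}" at line ${line}`);
  }

  return tokens;
}

module.exports = tokenize;
```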

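Now, each token includes a line number, which helps when reporting errors. Because tokens now carry a line property, the earlier lexer test needs updating: either add line: 1 to each expected token, or compare with toMatchObject(), which ignores extra properties.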
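The lexer reports lines only, while the goals listed earlier also mention positions. If the lexer also recorded each token's starting offset in the input (an assumption; the lexer above doesn't store it), a helper like the hypothetical positionAt() below could turn that offset into a line and column for more precise error messages.

```javascript
// Hypothetical helper: converts a character offset into a 1-based { line, column }
function positionAt(input, offset) {
  let line = 1;
  let lineStart = 0; // Offset of the first character on the current line

  for (let i = 0; i < offset && i < input.length; i++) {
    if (input[i] === '\n') {
      line++;
      lineStart = i + 1;
    }
  }

  return { line, column: offset - lineStart + 1 };
}
```

Computing the position on demand like this keeps the lexer's hot loop unchanged; the cost (a scan from the start of the input) is only paid when an error is actually reported.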

Step 2: Validating Syntax in the Parser

Next, we modify parser.js to detect errors before they cause issues:

```javascript
function parse(tokens) {
  // Comments don't affect structure, so drop them before parsing
  tokens = tokens.filter(token => token.type !== 'COMMENT');
  let current = 0;

  // Return the current token, failing clearly if the input ends early (e.g. a missing "}")
  function peek() {
    const token = tokens[current];
    if (!token) {
      const last = tokens[tokens.length - 1];
      throw new Error(`Unexpected end of input after line ${last ? last.line : 1}`);
    }
    return token;
  }

  function parseRule() {
    const selectorToken = peek();
    if (selectorToken.type !== 'SELECTOR') {
      throw new Error(`Expected a selector at line ${selectorToken.line}, but found ${selectorToken.type}`);
    }

    const rule = {
      type: 'Rule',
      selector: selectorToken.value,
      declarations: []
    };
    current++; // Move past selector

    if (peek().type !== 'LEFT_BRACE') {
      throw new Error(`Expected "{" after selector at line ${peek().line}`);
    }
    current++; // Move past {

    while (peek().type !== 'RIGHT_BRACE') {
      rule.declarations.push(parseDeclaration());
    }
    current++; // Move past }

    return rule;
  }

  function parseDeclaration() {
    const propertyToken = peek();
    if (propertyToken.type !== 'PROPERTY') {
      throw new Error(`Expected property name at line ${propertyToken.line}, but found ${propertyToken.type}`);
    }

    const declaration = { property: propertyToken.value };
    current++; // Move past property

    if (peek().type !== 'COLON') {
      throw new Error(`Missing ":" after property "${declaration.property}" at line ${peek().line}`);
    }
    current++; // Move past :

    const valueToken = peek();
    if (valueToken.type !== 'VALUE') {
      throw new Error(`Expected value for property "${declaration.property}" at line ${valueToken.line}`);
    }
    declaration.value = valueToken.value;
    current++; // Move past value

    if (peek().type !== 'SEMICOLON') {
      throw new Error(`Expected ";" after value "${declaration.value}" at line ${peek().line}`);
    }
    current++; // Move past ;

    return declaration;
  }

  function parseStylesheet() {
    const stylesheet = {
      type: 'Stylesheet',
      rules: []
    };
    while (current < tokens.length) {
      stylesheet.rules.push(parseRule());
    }
    return stylesheet;
  }

  return parseStylesheet();
}

module.exports = parse;
```

Step 3: Handling Invalid Tokens

If the lexer encounters an unexpected character, it now throws an error with the line number:

```javascript
throw new Error(`Unexpected character "${char}" at line ${line}`);
```

The parser also reports missing colons, semicolons, and braces, ensuring that CSS is properly structured.
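The parse() function above still stops at the first error, whereas a browser drops the invalid declaration and keeps going. As a sketch of how recovery could work, the hypothetical parseDeclarationsWithRecovery() below parses a block's declarations, records an error for each malformed one, and skips ahead to the next `;`. It assumes the token shape produced by our lexer ({ type, value, line }) and, unlike parse() above, treats the final semicolon before `}` as optional, as CSS does.

```javascript
// Hypothetical recovery-oriented declaration parser. Starts at `start` (just after "{")
// and stops at the block's "}" without consuming it.
function parseDeclarationsWithRecovery(tokens, start) {
  const declarations = [];
  const errors = [];
  let current = start;

  while (current < tokens.length && tokens[current].type !== 'RIGHT_BRACE') {
    const [property, colon, value, end] = tokens.slice(current, current + 4);
    const isValid =
      property.type === 'PROPERTY' &&
      colon?.type === 'COLON' &&
      value?.type === 'VALUE' &&
      (end?.type === 'SEMICOLON' || end?.type === 'RIGHT_BRACE'); // Last ";" is optional

    if (isValid) {
      declarations.push({ property: property.value, value: value.value });
      current += end.type === 'SEMICOLON' ? 4 : 3; // Leave "}" for the caller
      continue;
    }

    // Record the problem, then skip to just past the next ";" (or stop at "}")
    errors.push(`Invalid declaration at line ${property.line}`);
    while (current < tokens.length && !['SEMICOLON', 'RIGHT_BRACE'].includes(tokens[current].type)) {
      current++;
    }
    if (tokens[current]?.type === 'SEMICOLON') current++;
  }

  return { declarations, errors, next: current };
}
```

In parseRule(), you could call this right after the `{`, continue from `next`, and collect the errors into a list returned alongside the AST instead of throwing.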

Step 4: Writing Tests for Error Handling

Now, let’s write test cases in tests/parser.test.js to confirm that errors are properly detected:

```javascript
const parse = require('../src/parser');
const tokenize = require('../src/lexer');

test('Throws error for missing colon', () => {
  const input = 'body { color black; }';
  const tokens = tokenize(input);
  expect(() => parse(tokens)).toThrow('Missing ":" after property "color" at line 1');
});

test('Throws error for missing semicolon', () => {
  const input = 'body { color: black }';
  const tokens = tokenize(input);
  expect(() => parse(tokens)).toThrow('Expected ";" after value "black" at line 1');
});

test('Throws error for unexpected character', () => {
  const input = 'body $color: black;';
  expect(() => tokenize(input)).toThrow('Unexpected character "$" at line 1');
});
```

Run the tests:

```shell
npm test
```

If everything is correct, the tests should pass, confirming that our error handling works as expected.

Good error handling makes a parser more robust and helps developers identify mistakes quickly. Without validation, incorrect CSS could go unnoticed, leading to incorrect styles or broken rendering.
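The checks above catch syntax errors, but not the second category listed earlier: properties that don't exist. A separate pass over the AST can flag those. This sketch uses a small hypothetical allow-list (KNOWN_PROPERTIES), not the full set of CSS properties, so treat its warnings as a starting point. It assumes the AST shape used in this guide: Rule nodes with declarations and optional children, and MediaQuery nodes with rules.

```javascript
// Hypothetical, deliberately tiny allow-list; a real validator needs the full property list
const KNOWN_PROPERTIES = new Set([
  'color', 'background-color', 'font-size', 'font-weight',
  'margin', 'padding', 'display', 'width', 'height'
]);

function findUnknownProperties(ast, known = KNOWN_PROPERTIES) {
  const warnings = [];

  function visit(node) {
    if (node.type === 'MediaQuery') {
      node.rules.forEach(visit); // Check the rules inside the media block
      return;
    }
    for (const { property } of node.declarations) {
      // Custom properties (--anything) are always valid
      if (!property.startsWith('--') && !known.has(property)) {
        warnings.push(`Unknown property "${property}" in "${node.selector}"`);
      }
    }
    (node.children || []).forEach(visit); // Nested rules, if any
  }

  ast.rules.forEach(visit);
  return warnings;
}
```

Returning warnings instead of throwing mirrors how browsers treat unknown properties: the declaration is ignored, but the rest of the stylesheet still applies.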

Next, we'll test the parser more thoroughly and measure its performance on larger inputs.

Testing the CSS Parser

A parser is only as good as its ability to handle real-world CSS. Now that we have built the lexer, parser, and error handling, we need to ensure that everything works correctly. Testing validates that the parser correctly processes CSS, detects errors, and performs efficiently.

Writing Unit Tests

Unit tests verify that each component functions as expected. Using Jest, we can test various CSS inputs, covering valid rules, invalid syntax, nested structures, and media queries.

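Add the following test cases to tests/parser.test.js. They assume a src/parser.js that combines the error handling above with the nested-rule and media-query support from earlier (including a top-level loop that sends MEDIA tokens to parseMediaQuery()), so every rule carries a children array: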

```javascript
const parse = require('../src/parser');
const tokenize = require('../src/lexer');

test('Parses a basic CSS rule', () => {
  const input = 'body { color: black; }';
  const tokens = tokenize(input);
  const ast = parse(tokens);

  expect(ast).toEqual({
    type: 'Stylesheet',
    rules: [
      {
        type: 'Rule',
        selector: 'body',
        declarations: [{ property: 'color', value: 'black' }],
        children: []
      }
    ]
  });
});

test('Parses nested rules correctly', () => {
  const input = '.container { color: black; .child { color: blue; } }';
  const tokens = tokenize(input);
  const ast = parse(tokens);

  expect(ast.rules[0].children.length).toBe(1);
  expect(ast.rules[0].children[0].selector).toBe('.child');
});

// Requires a lexer that emits MEDIA tokens for @media
test('Parses media queries correctly', () => {
  const input = '@media (max-width: 600px) { body { color: gray; } }';
  const tokens = tokenize(input);
  const ast = parse(tokens);

  expect(ast.rules[0].type).toBe('MediaQuery');
  expect(ast.rules[0].condition).toBe('(max-width: 600px)');
  expect(ast.rules[0].rules.length).toBe(1);
});
```

Run the tests using:

```shell
npm test
```

If everything is working, the tests should pass.

Performance Testing

A parser should handle large CSS files without slowing down. To measure performance, we can use Node.js performance hooks:

```javascript
const { performance } = require('perf_hooks');
const tokenize = require('../src/lexer');
const parse = require('../src/parser');

// 1,000 copies of a simple rule
const largeCSS = '.container { color: black; }'.repeat(1000);

const start = performance.now();
const tokens = tokenize(largeCSS);
const ast = parse(tokens);
const end = performance.now();

console.log(`Parsing time: ${end - start}ms`);
```

If parsing takes too long, optimizations may be required, such as reducing redundant loops or optimizing string operations.
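A single timing like the one above is noisy: the first run includes JIT warm-up, and garbage collection can skew any one measurement. A slightly sturdier approach is to run the work a few times and report the median. benchmark() here is a hypothetical helper, not part of any library; performance.now() comes from Node's built-in perf_hooks module.

```javascript
const { performance } = require('perf_hooks');

// Hypothetical helper: runs `fn` a few times to warm up, then times `runs`
// iterations and returns the median duration in milliseconds
function benchmark(fn, { warmup = 3, runs = 10 } = {}) {
  for (let i = 0; i < warmup; i++) fn();

  const times = [];
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    fn();
    times.push(performance.now() - start);
  }

  times.sort((a, b) => a - b);
  const mid = Math.floor(times.length / 2);
  // Median: middle value for odd counts, average of the two middle values for even counts
  return times.length % 2 ? times[mid] : (times[mid - 1] + times[mid]) / 2;
}
```

For example, `benchmark(() => parse(tokenize(largeCSS)))` would return the median parse time in milliseconds. The median is less sensitive than the mean to an occasional slow run.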

Now that the parser is tested, let's look at how it can plug into existing tools.

Integrating with Existing Tools

Now that we have a fully functional CSS parser, the next step is to integrate it with existing tools. A CSS parser becomes more useful when it can interact with linters, preprocessors, optimizers, or even custom development pipelines. By integrating it with existing workflows, we can analyze, transform, or optimize CSS dynamically.

Using the Parser in a Linter

A linter checks CSS code for errors, style inconsistencies, or best practices violations. Our parser can power a simple linter by analyzing the Abstract Syntax Tree (AST) and flagging problematic styles.

Example: Enforcing Consistent Color Usage

Let’s create a basic linter that detects the use of black instead of a predefined variable or hex code.

Create src/linter.js:

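```javascript
function lintAST(ast) {
  const warnings = [];

  function checkRule(rule) {
    // Media queries have no declarations of their own; lint the rules inside them
    if (rule.type === 'MediaQuery') {
      rule.rules.forEach(checkRule);
      return;
    }

    rule.declarations.forEach(declaration => {
      if (declaration.property === 'color' && declaration.value === 'black') {
        warnings.push(`Avoid using 'black' directly in "${rule.selector}". Use a variable or hex code instead.`);
      }
    });

    // Nested rules (and nested media queries) live in `children`
    if (rule.children) {
      rule.children.forEach(checkRule);
    }
  }

  ast.rules.forEach(checkRule);
  return warnings;
}

module.exports = lintAST;
```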

Now, let’s test it by integrating it with our parser. Create tests/linter.test.js:

```javascript
const lintAST = require('../src/linter');
const tokenize = require('../src/lexer');
const parse = require('../src/parser');

test('Detects black color usage', () => {
  const input = 'body { color: black; }';
  const tokens = tokenize(input);
  const ast = parse(tokens);
  const warnings = lintAST(ast);

  expect(warnings.length).toBe(1);
  expect(warnings[0]).toContain("Avoid using 'black'");
});
```

Run the test using:

```shell
npm test
```

If everything is working, it should detect the issue.
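Additional checks slot into the same traversal. As one hypothetical example, the helper below flags properties declared more than once within a single rule, which is often a copy-paste mistake. Repeated properties are sometimes intentional fallbacks (for example, a vendor-prefixed value followed by a standard one), so a real linter would want a way to silence this check.

```javascript
// Hypothetical lint rule: reports each property declared more than once in a rule
function findDuplicateProperties(rule) {
  const seen = new Set();
  const duplicates = [];

  for (const { property } of rule.declarations) {
    if (seen.has(property) && !duplicates.includes(property)) {
      duplicates.push(property);
    }
    seen.add(property);
  }

  return duplicates.map(
    property => `"${property}" is declared more than once in "${rule.selector}"`
  );
}
```

Inside checkRule(), after the media-query check, `warnings.push(...findDuplicateProperties(rule))` would add these messages to the existing list.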

Transforming CSS for Preprocessing

Many preprocessors allow features like nested rules, mixins, and variables, which are then compiled into standard CSS. Our parser can be integrated into a custom preprocessor to support such transformations.

For example, to flatten nested rules into standard CSS, create src/transformer.js:

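```javascript
function flattenAST(ast) {
  const flatRules = [];

  function processRule(rule, parentSelector = '') {
    // Media queries are passed through unchanged in this simple version
    if (rule.type === 'MediaQuery') {
      flatRules.push(rule);
      return;
    }

    const fullSelector = parentSelector ? `${parentSelector} ${rule.selector}` : rule.selector;
    flatRules.push({
      selector: fullSelector,
      declarations: rule.declarations
    });

    rule.children?.forEach(child => processRule(child, fullSelector));
  }

  // Wrap the call: forEach passes the index as a second argument,
  // which would otherwise be mistaken for a parent selector
  ast.rules.forEach(rule => processRule(rule));
  return flatRules;
}

module.exports = flattenAST;
```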

If given this SCSS-like input:

```scss
.container {
  color: black;

  .child {
    color: blue;
  }
}
```

The flattened rules correspond to this CSS:

```css
.container {
  color: black;
}

.container .child {
  color: blue;
}
```

This allows us to build a preprocessor that compiles enhanced CSS features into standard CSS.
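flattenAST() returns an array of { selector, declarations } objects rather than CSS text, so a small printer is needed to produce output like the CSS above. The printRules() sketch below is hypothetical. It skips rules left with no declarations after flattening (such as a parent that only contained nested rules) and prints any media query nodes it finds, with their rules indented.

```javascript
// Hypothetical printer: turns flattened rules ({ selector, declarations })
// and media query nodes ({ type: 'MediaQuery', condition, rules }) into CSS text
function printRules(rules, indent = '') {
  return rules
    // Skip rules with no declarations of their own (e.g. parents of nested rules)
    .filter(rule => rule.type === 'MediaQuery' || rule.declarations.length > 0)
    .map(rule => {
      if (rule.type === 'MediaQuery') {
        const inner = printRules(rule.rules, indent + '  ');
        return `${indent}@media ${rule.condition} {\n${inner}\n${indent}}`;
      }
      const body = rule.declarations
        .map(({ property, value }) => `${indent}  ${property}: ${value};`)
        .join('\n');
      return `${indent}${rule.selector} {\n${body}\n${indent}}`;
    })
    .join('\n\n');
}
```

Used as `printRules(flattenAST(ast))`, this yields the two flat rules shown above, separated by a blank line.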

Optimizing Stylesheets

A CSS optimizer removes unnecessary styles, compresses values, and minifies the output. Our parser can feed a minifier that serializes the AST without extra whitespace. Value-level optimizations, such as shortening color values, would be separate passes over the declarations.
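Color shortening can run as a separate pass over declaration values before serialization. One conservative approach, sketched here, lowercases six-digit hex colors and collapses them to three digits when every channel pair repeats (`#ffcc00` becomes `#fc0`). This covers only a small subset of what production minifiers do. It leaves named colors, `rgb()` values, and eight-digit hex colors untouched. `shortenHexColors` and `shortenColorsInAst` are hypothetical helpers. Like minifyAST, the AST pass only visits top-level rules.

```javascript
// Collapses #aabbcc-style hex colors to #abc when every pair repeats.
// The \b keeps eight-digit (#rrggbbaa) colors from being partially matched.
function shortenHexColors(value) {
  return value.replace(/#([0-9a-fA-F]{6})\b/g, (match, hex) => {
    const h = hex.toLowerCase();
    if (h[0] === h[1] && h[2] === h[3] && h[4] === h[5]) {
      return `#${h[0]}${h[2]}${h[4]}`;
    }
    return `#${h}`;
  });
}

// Applies the pass to every declaration value, returning a new AST.
function shortenColorsInAst(ast) {
  return {
    ...ast,
    rules: ast.rules.map((rule) => ({
      ...rule,
      declarations: rule.declarations.map((d) => ({ ...d, value: shortenHexColors(d.value) })),
    })),
  };
}

console.log(shortenHexColors('1px solid #FFCC00')); // 1px solid #fc0
console.log(shortenHexColors('#336699')); // #369
```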

Modify src/minifier.js:

function minifyAST(ast) {
  // Serialize each top-level rule with no whitespace between tokens.
  // Note: nested children and media queries are not serialized here.
  return ast.rules.map(rule => {
    let minifiedRule = `${rule.selector}{`;

    rule.declarations.forEach(declaration => {
      minifiedRule += `${declaration.property}:${declaration.value};`;
    });

    minifiedRule += '}';
    return minifiedRule;
  }).join('');
}

module.exports = minifyAST;

If given:

body {
  color: black;
  font-size: 16px;
}

It outputs:

body{color:black;font-size:16px;}

Stripping whitespace shrinks the file. The exact savings depend on how much indentation and line spacing the source stylesheet contains.
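minifyAST ignores nested children and keeps the final semicolon in each block. The variant sketched below addresses both. It assumes the same rule shape (`selector`, `declarations`, optional `children`) and flattens nested rules into descendant selectors, as flattenAST does. It also omits the last semicolon in each block, which CSS permits, and skips rules whose body would be empty. `minifyRules` is a hypothetical name, and the sketch does not handle media queries or the `&` parent reference.

```javascript
// Serializes rules compactly: no whitespace and no semicolon before "}".
// Nested children are emitted as flattened descendant selectors.
function minifyRules(rules, parentSelector = '') {
  return rules
    .map((rule) => {
      const selector = parentSelector ? `${parentSelector} ${rule.selector}` : rule.selector;
      const body = (rule.declarations || [])
        .map((d) => `${d.property}:${d.value.trim()}`)
        .join(';'); // join() adds separators only between declarations
      const own = body ? `${selector}{${body}}` : '';
      return own + minifyRules(rule.children || [], selector);
    })
    .join('');
}

console.log(minifyRules([
  {
    selector: 'body',
    declarations: [
      { property: 'color', value: 'black' },
      { property: 'font-size', value: '16px' },
    ],
  },
])); // body{color:black;font-size:16px}
```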

Exposing the Parser as an API

For broader usability, we can expose the parser as an API to process CSS dynamically. Using Express.js, we can create a simple API that accepts CSS input, parses it, and returns the AST.

Create server.js:

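const express = require('express');
const bodyParser = require('body-parser');
const tokenize = require('./src/lexer');
const parse = require('./src/parser');

const app = express();

// Parse text/plain request bodies into a string on req.body.
app.use(bodyParser.text());

app.post('/parse', (req, res) => {
  // With any other Content-Type, bodyParser.text() leaves req.body unparsed.
  if (typeof req.body !== 'string') {
    return res.status(400).json({ error: 'Send CSS with Content-Type: text/plain' });
  }

  try {
    const tokens = tokenize(req.body);
    const ast = parse(tokens);
    res.json(ast);
  } catch (error) {
    res.status(400).json({ error: error.message });
  }
});

app.listen(3000, () => console.log('CSS Parser API running on port 3000'));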

Now, running:

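curl -X POST http://localhost:3000/parse -H "Content-Type: text/plain" -d "body { color: black; }"

returns the AST as a JSON response. The Content-Type header matters because curl -d sends application/x-www-form-urlencoded by default. bodyParser.text() only parses text/plain unless configured otherwise.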
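Keeping the request logic in a plain function makes it testable without starting a server. The sketch below takes the raw body plus the lexer and parser functions and returns a status and payload. `handleParseRequest`, `fakeTokenize`, and `fakeParse` are hypothetical names. The two fakes are stand-ins for src/lexer and src/parser, used only so the example runs on its own.

```javascript
// Pure request handler: takes the raw body and the lexer/parser functions,
// returns an HTTP status and a JSON-serializable payload.
function handleParseRequest(body, tokenize, parse) {
  if (typeof body !== 'string' || body.trim() === '') {
    return { status: 400, payload: { error: 'Expected a non-empty text/plain body' } };
  }
  try {
    return { status: 200, payload: parse(tokenize(body)) };
  } catch (error) {
    return { status: 400, payload: { error: error.message } };
  }
}

// Hypothetical stand-ins for src/lexer and src/parser, for demonstration only.
const fakeTokenize = (css) => css.split(/\s+/).filter(Boolean);
const fakeParse = (tokens) => {
  if (!tokens.includes('{')) throw new Error('Expected "{"');
  return { rules: [{ selector: tokens[0] }] };
};

// In server.js the Express route would then reduce to:
//   app.post('/parse', (req, res) => {
//     const { status, payload } = handleParseRequest(req.body, tokenize, parse);
//     res.status(status).json(payload);
//   });
const result = handleParseRequest('body { color: black; }', fakeTokenize, fakeParse);
console.log(result.status, JSON.stringify(result.payload));
```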

By integrating our CSS parser with linters, preprocessors, minifiers, and APIs, we extend its capabilities beyond simple parsing. This allows for real-world applications like enforcing coding standards, transforming stylesheets, optimizing CSS, and providing dynamic analysis tools. Now that the parser is fully functional, the next step is to explore performance optimizations for better speed and scalability.

Conclusion

Building a CSS parser from scratch provides deep insights into how CSS is structured, processed, and optimized. We started by breaking down raw CSS into tokens using a lexer, then transformed those tokens into a structured Abstract Syntax Tree (AST) with a parser. From handling nested rules and media queries to error validation, we ensured our parser could process real-world CSS efficiently.

Beyond parsing, we integrated it with linters, preprocessors, and minifiers, making it a practical tool for enforcing CSS standards and optimizing stylesheets. We also exposed it as an API, enabling dynamic CSS processing in external applications.

A well-built parser is more than a one-off utility: it can improve performance, maintainability, and consistency in styling workflows. Whether you're automating CSS analysis or building a custom preprocessor, understanding CSS parsing gives you fine-grained control over how styles are interpreted and applied.

People Also Ask:

1. Why would I need to build a custom CSS parser?

A custom CSS parser allows you to analyze, transform, and optimize styles beyond what standard CSS tools provide. It’s useful for building custom linters, preprocessors, minifiers, or automation workflows that require direct manipulation of CSS.

2. How is a lexer different from a parser?

A lexer (tokenizer) breaks raw CSS code into small, meaningful units called tokens. The parser takes those tokens and organizes them into a structured Abstract Syntax Tree (AST), making it possible to analyze and modify CSS programmatically.
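The toy example below illustrates the split. It is deliberately simpler than the lexer and parser built earlier. It only handles flat rules, and it breaks on colons inside selectors or values (for example `a:hover` or `url(http://...)`). `toyTokenize` and `toyParse` are hypothetical names. The lexer knows nothing about structure, while the parser enforces the `selector { property: value; }` grammar.

```javascript
// Toy lexer: splits CSS into punctuation tokens and trimmed word tokens.
function toyTokenize(css) {
  return (css.match(/[{}:;]|[^{}:;]+/g) || [])
    .map((text) => text.trim())
    .filter((text) => text.length > 0)
    .map((text) => ('{}:;'.includes(text) ? { type: 'punct', value: text } : { type: 'word', value: text }));
}

// Toy parser: turns the flat token list into { rules: [{ selector, declarations }] }.
function toyParse(tokens) {
  const rules = [];
  let i = 0;

  // Advances past the next token, failing if it isn't the expected punctuation.
  const consume = (value) => {
    const token = tokens[i];
    if (!token || token.type !== 'punct' || token.value !== value) {
      throw new Error(`Expected "${value}" at token ${i}`);
    }
    i++;
  };

  while (i < tokens.length) {
    const selector = tokens[i++].value;
    consume('{');
    const declarations = [];
    while (tokens[i] && tokens[i].value !== '}') {
      const property = tokens[i++].value;
      consume(':');
      const valueToken = tokens[i++];
      if (!valueToken || valueToken.type !== 'word') {
        throw new Error(`Expected a value for "${property}"`);
      }
      declarations.push({ property, value: valueToken.value });
      if (tokens[i] && tokens[i].value === ';') i++; // the last semicolon is optional
    }
    consume('}');
    rules.push({ selector, declarations });
  }
  return { rules };
}

console.log(toyTokenize('a { color: red; }').map((t) => t.value).join(' ')); // a { color : red ; }
console.log(JSON.stringify(toyParse(toyTokenize('a { color: red; }'))));
// {"rules":[{"selector":"a","declarations":[{"property":"color","value":"red"}]}]}
```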

3. Can this parser handle nested CSS like SCSS?

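Yes, for the nesting covered in this guide. The parser supports nested rules by processing child selectors inside parent blocks, and it also handles media queries. Combined with the flattenAST transformer, nested input can be converted into standard CSS. Other SCSS features, such as mixins and variables, would need additional transformation passes.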

4. How can I optimize this parser for large CSS files?

For better performance, consider lazy parsing (only parsing needed sections), caching parsed results, and reducing string operations in the lexer and parser. Running benchmark tests can also help identify bottlenecks.
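As an illustration of the caching idea, a bounded cache can wrap any function that turns CSS text into an AST. The sketch below is keyed by the full CSS string and evicts the least-recently-used entry when it grows past `maxEntries`. `createCachedParser` and the default limit of 100 are assumptions for illustration. Two caveats apply. First, callers receive the same AST object on a cache hit, so they should not mutate it. Second, very large stylesheets as keys may call for hashing the input instead.

```javascript
// Wraps any (css) => ast function with a bounded LRU cache keyed by the CSS text.
// A Map iterates in insertion order, so its first key is the least recently used.
function createCachedParser(parseCss, maxEntries = 100) {
  const cache = new Map();

  return function cachedParse(css) {
    if (cache.has(css)) {
      const ast = cache.get(css);
      cache.delete(css); // re-insert to mark as most recently used
      cache.set(css, ast);
      return ast;
    }
    const ast = parseCss(css);
    cache.set(css, ast);
    if (cache.size > maxEntries) {
      // Evict the least recently used entry.
      cache.delete(cache.keys().next().value);
    }
    return ast;
  };
}
```

In a project, `parseCss` would typically be `(css) => parse(tokenize(css))` using the lexer and parser from this guide.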

5. How can I integrate this parser into an existing project?

You can integrate the parser into linters, CSS optimizers, preprocessors, and automation scripts. It can also be exposed as an API using a framework like Express.js to process CSS dynamically.