In today’s rapidly shifting landscape, the ability to make decisions based on competitive insight is in high demand. From monitoring and logging to complex, multi-criteria recommendations, businesses increasingly rely on real-time data processing for a variety of core activities. Enter Apache Flink: a battle-tested framework designed for processing streams of events in real time.

This tutorial guides you through creating a basic real-time data analytics engine with Apache Flink, keeping code to a minimum. Whether you are an advanced user or a complete beginner, you will learn the must-know facts about how Flink works and how it applies in the real world.

What is Apache Flink?

Apache Flink is an open-source framework designed for processing data streams in real time. Unlike traditional batch processing frameworks, which work on a known, static dataset, Flink processes incoming data as soon as it arrives and provides insights in real time.

Apache Flink is used by 2,117 companies in the United States, 928 of them in the Information Technology and Services industry. It is primarily used by businesses with 50 to 200 employees and by those with revenues of more than $1 billion.

Why does this matter? Suppose you are checking online transactions for potential fraud. You don’t want to wait until the next day to find out that fraud happened; you want to stop it as it occurs. In use cases like this, real-time analytics can save companies millions, and Flink is built to handle them efficiently.

Because Apache Flink processes streaming data with low latency and high throughput, it is a strong choice for real-time anomaly detection, letting businesses respond as events unfold. Financial institutions, for example, can flag suspicious transactions before they complete, lowering the risk of fraud.

Likewise, e-commerce platforms can adapt user experiences dynamically, basing recommendations not only on past interactions but also on real-time behavior and interests. Flink’s distributed architecture scales out across large clusters while keeping latency low and throughput high. With Flink, enterprises can ground decisions in data as it happens rather than in hindsight, which gives them a competitive advantage.

Comparing Flink to Other Tools

Let’s break down how Flink compares to other popular stream-processing tools.

Flink vs Spark Streaming

Apache Spark is primarily known for its batch processing capabilities, but it also supports streaming through Spark Streaming. For true low-latency processing, however, Flink has the edge.

- Latency: Spark Streaming is designed around micro-batch processing, whereas Flink processes events one at a time, which works better for latency-sensitive applications such as fraud detection.

- Stateful stream processing: Flink excels at managing state, remembering information across the events in a stream. That is key for tracking user interactions over time and the patterns that emerge from them. Spark also supports state, but its architecture is less suited than Flink’s to complex, real-time stateful jobs.
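To make "state" concrete, here is a minimal plain-Python sketch (not Flink’s state API) of keyed state: every event updates a value remembered per key, much as a Flink operator keeps state per user once the stream is keyed by user ID. The event tuples and the `KeyedCounter` class are hypothetical names used only for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


class KeyedCounter:
    """Toy stand-in for per-key state (hypothetical; not Flink's state API)."""

    def __init__(self) -> None:
        # One counter per key, like state scoped to a stream keyed by user ID.
        self._counts: Dict[str, int] = defaultdict(int)

    def update(self, key: str) -> int:
        # Increment this key's state and return the new value.
        self._counts[key] += 1
        return self._counts[key]


def running_counts(events: Iterable[Tuple[str, str]]) -> Iterator[Tuple[str, int]]:
    """For each (user_id, action) event, emit (user_id, interactions so far)."""
    state = KeyedCounter()
    for user_id, _action in events:
        yield user_id, state.update(user_id)


events = [("alice", "click"), ("bob", "view"), ("alice", "purchase")]
print(list(running_counts(events)))  # [('alice', 1), ('bob', 1), ('alice', 2)]
```

In a real Flink job this state would live inside a keyed operator and be included in checkpoints; here it is simply a dictionary held in memory.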

Flink vs Kafka Streams

Kafka Streams works well for real-time processing but is tightly coupled to Kafka. Flink is a better fit when you work with several different data sources.

- Integration with data sources: Kafka Streams reads its input streams only from Kafka topics, whereas Flink can consume from many sources, such as Kafka, RabbitMQ, and Amazon Kinesis. That makes Flink better suited to complex data pipelines.

- Processing models: Kafka Streams performs well for straightforward operations, while Flink offers a wider range, including Complex Event Processing (CEP) and stream joins, and scales to large, enterprise-level analytics workloads.
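Complex Event Processing means matching patterns across sequences of events. The sketch below is plain Python, not Flink’s CEP library: it flags a user after at least three consecutive failed logins followed by a successful one, a pattern sometimes treated as a sign of account takeover. The event format, the `detect_pattern` name, and the threshold are assumptions.

```python
from typing import Dict, Iterable, List, Tuple


def detect_pattern(events: Iterable[Tuple[str, str]], failures_needed: int = 3) -> List[str]:
    """Return user IDs (in match order) whose own events show at least
    `failures_needed` consecutive 'fail' outcomes followed by a 'success'."""
    consecutive_failures: Dict[str, int] = {}
    matches: List[str] = []
    for user_id, outcome in events:
        streak = consecutive_failures.get(user_id, 0)
        if outcome == "fail":
            consecutive_failures[user_id] = streak + 1
        elif outcome == "success":
            if streak >= failures_needed:
                matches.append(user_id)  # pattern completed for this user
            consecutive_failures[user_id] = 0  # a success resets the streak
    return matches


logins = [("u1", "fail"), ("u2", "fail"), ("u1", "fail"),
          ("u1", "fail"), ("u2", "success"), ("u1", "success")]
print(detect_pattern(logins))  # ['u1']
```

Streaks are tracked per user, so interleaved events from other users do not break a match, which mirrors how a pattern would be evaluated on a keyed stream.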

Flink strengths

- Exactly-once semantics: Flink provides exactly-once guarantees for application state (and end to end, when paired with replayable sources and transactional sinks), which is important when you calculate real-time metrics from stream data or run streaming transactions.

- Unified batch and stream processing: Flink provides one unified API for both batch and streaming jobs, which simplifies development.
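Exactly-once state consistency rests on checkpoints: Flink periodically snapshots operator state together with the source position, and after a failure it restores the snapshot and replays input from that position. The plain-Python simulation below is a simplified model with hypothetical names, not Flink’s implementation; it shows why the final result matches a failure-free run.

```python
from typing import List, Optional, Tuple


def run_with_failure(events: List[int], checkpoint_every: int,
                     fail_at: Optional[int]) -> int:
    """Sum `events`, snapshotting (source offset, state) every `checkpoint_every`
    events. If `fail_at` is given, simulate one crash just before processing
    that offset, then restore the last snapshot and replay from its offset."""
    checkpoint: Tuple[int, int] = (0, 0)  # (next source offset, running sum)
    offset, total = checkpoint
    crashed = False
    while offset < len(events):
        if offset == fail_at and not crashed:
            crashed = True
            offset, total = checkpoint  # restore state AND rewind the source together
            continue
        total += events[offset]
        offset += 1
        if offset % checkpoint_every == 0:
            checkpoint = (offset, total)  # consistent snapshot of position + state
    return total


print(run_with_failure(list(range(1, 11)), checkpoint_every=3, fail_at=None))  # 55
print(run_with_failure(list(range(1, 11)), checkpoint_every=3, fail_at=7))     # 55
```

The key design point is that position and state are restored as a pair. If the simulation rewound the source but kept the in-memory `total`, events processed after the last checkpoint would be counted twice, which is at-least-once rather than exactly-once behavior.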

Spark Streaming and Kafka Streams are proven, capable tools. For mission-critical, real-time use cases that demand responsiveness and a richer feature set, however, Apache Flink is often the strongest choice, particularly in web application architectures built around responsive user interactions.

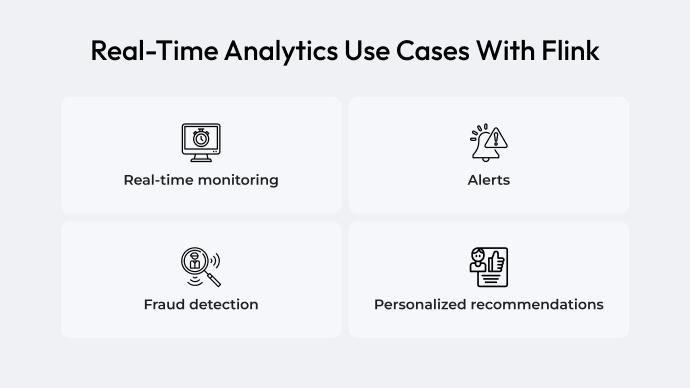

Example Use Cases of Flink-Based Real-Time Analytics

Flink’s ability to deliver real-time insights has made it popular across many industries. Here are a few common use cases:

Live monitoring and alerts

E-commerce sites can use Flink to monitor traffic and server performance. If something goes wrong, such as a sudden dip in sales or a jump in page load times, Flink can trigger alerts in real time so teams can fix issues before they snowball into larger problems.
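A minimal alerting rule, sketched in plain Python under assumed thresholds: raise an alert only when page load time stays above a limit for several consecutive readings, so a single slow request does not page the team. The 2,000 ms limit, the three-reading requirement, and the `load_time_alerts` name are illustrative assumptions, not Flink defaults.

```python
from typing import Iterable, Iterator


def load_time_alerts(readings_ms: Iterable[float], limit_ms: float = 2000.0,
                     consecutive: int = 3) -> Iterator[int]:
    """Yield the index of each reading at which an alert fires, i.e. the point
    where `consecutive` readings in a row have exceeded `limit_ms`."""
    streak = 0
    for i, value in enumerate(readings_ms):
        streak = streak + 1 if value > limit_ms else 0
        if streak == consecutive:
            yield i  # fire once per sustained breach, not on every slow reading


readings = [1500, 2100, 2500, 2300, 2600, 1200, 2100, 2200, 2400]
print(list(load_time_alerts(readings)))  # [3, 8]
```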

Fraud detection

Banks can use Flink to analyze streams of transaction data, applying machine learning models to near real-time data to identify patterns that suggest fraud. This approach helps prevent financial losses and improves security.
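Alongside machine learning models, fraud pipelines often apply simple stateful rules. This plain-Python sketch (not Flink code) implements one such rule as an illustration: flag a card that makes more than `max_txns` transactions within the last `window_s` seconds. The rule, its parameters, and the `velocity_flags` name are assumptions.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple


def velocity_flags(txns: Iterable[Tuple[str, float]], max_txns: int = 3,
                   window_s: float = 60.0) -> List[Tuple[str, float]]:
    """Return (card_id, timestamp) for each transaction that pushes its card
    over `max_txns` transactions within the last `window_s` seconds.
    Assumes transactions arrive in timestamp order."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)  # per-card state
    flagged: List[Tuple[str, float]] = []
    for card_id, ts in txns:
        window = recent[card_id]
        window.append(ts)
        # Evict timestamps that are no longer inside (ts - window_s, ts].
        while window and window[0] <= ts - window_s:
            window.popleft()
        if len(window) > max_txns:
            flagged.append((card_id, ts))
    return flagged


txns = [("c1", 0), ("c1", 10), ("c2", 15), ("c1", 20), ("c1", 30), ("c1", 100)]
print(velocity_flags(txns))  # [('c1', 30)]
```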

Personalized recommendations

Companies such as Netflix use Flink to analyze user behavior and provide personalized recommendations. If you have just watched a sci-fi film, for instance, that viewing event can be processed by Flink and similar titles recommended right away, all without you lifting a finger.
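The flow described above can be sketched in plain Python: each viewing event updates per-user state (titles already watched), and same-genre recommendations are looked up the moment the event arrives. The catalog, titles, and `on_watch` function are hypothetical, and real recommender systems rely on far richer models than a genre lookup.

```python
from collections import defaultdict
from typing import Dict, List, Set

# Hypothetical catalog mapping titles to genres.
CATALOG: Dict[str, str] = {
    "Star Voyage": "sci-fi",
    "Orbit": "sci-fi",
    "Red Planet Run": "sci-fi",
    "Laugh Track": "comedy",
}

# Per-user state: titles each user has already watched.
watched: Dict[str, Set[str]] = defaultdict(set)


def on_watch(user_id: str, title: str) -> List[str]:
    """Handle one viewing event; return unseen titles in the same genre."""
    genre = CATALOG[title]
    watched[user_id].add(title)
    return sorted(t for t, g in CATALOG.items()
                  if g == genre and t not in watched[user_id])


print(on_watch("ana", "Star Voyage"))  # ['Orbit', 'Red Planet Run']
print(on_watch("ana", "Orbit"))        # ['Red Planet Run']
```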

Setting Up Apache Flink

You’d be surprised how easy it is to get started with Flink, and you can scale from your local machine to cloud environments such as AWS or Azure. Apache Flink is a solid framework for developers and data engineers who want to get hands-on with their analytics.

Here are the key components you will encounter along the way:

- JobManager: Coordinates the execution of your Flink jobs, scheduling tasks and managing checkpoints and recovery.

- TaskManager: The worker process that executes the tasks assigned by the JobManager.

- APIs: Flink offers two important APIs: the DataStream API for real-time processing and the DataSet API for batch jobs (the DataSet API has since been deprecated in favor of running DataStream programs in batch mode). Since our focus is real-time data, this tutorial concentrates on the DataStream API.
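To picture how the JobManager and TaskManagers divide work, here is a deliberately simplified plain-Python model: a job with a given parallelism is split into subtasks, and a "JobManager" assigns them to free task slots across TaskManagers. Real Flink scheduling is more sophisticated (slot sharing, resource profiles, locality); the round-robin policy and the `assign_subtasks` name are assumptions for illustration.

```python
from typing import Dict, List


def assign_subtasks(parallelism: int, task_managers: Dict[str, int]) -> Dict[str, List[int]]:
    """Assign subtask indices 0..parallelism-1 round-robin across TaskManagers
    that still have free slots. `task_managers` maps a name to its slot count."""
    total_slots = sum(task_managers.values())
    if parallelism > total_slots:
        raise ValueError(f"need {parallelism} slots, only {total_slots} available")
    assignment: Dict[str, List[int]] = {name: [] for name in task_managers}
    names = list(task_managers)
    i = 0
    for subtask in range(parallelism):
        name = names[i % len(names)]
        # Skip full TaskManagers; a free slot must exist given the check above.
        while len(assignment[name]) >= task_managers[name]:
            i += 1
            name = names[i % len(names)]
        assignment[name].append(subtask)
        i += 1
    return assignment


print(assign_subtasks(5, {"tm-1": 2, "tm-2": 4}))
# {'tm-1': [0, 2], 'tm-2': [1, 3, 4]}
```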

Taken together, these components let Flink process incoming big data in real time. Developers can build scalable, robust applications on top of it that surface the insights needed for real-time decision-making.

Key Concepts of Real-Time Data Analytics in Flink

Flink processes continuous, potentially unbounded streams of data. This kind of stream processing is a fundamental part of modern web application architecture, allowing businesses to process high-volume data quickly, with low latency and at scale. The result is timely insight that improves user experiences. Let’s look at the core concepts:

Streaming input and output data transformations

As data flows through Flink, you can apply operations such as map, filter, and aggregate to extract useful insights. For example, with each new reading, Flink can instantly detect deviations from normal values and update averages, so your reports are always up to date.
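The map, filter, and aggregate steps can be illustrated with ordinary Python generators, which, like a stream, handle one element at a time. This is not the Flink DataStream API; the text readings, the 0 to 100 validity range, the 20% deviation threshold, and the `analyze` name are assumptions.

```python
from typing import Iterable, Iterator, Tuple


def analyze(raw: Iterable[str], max_deviation: float = 0.2) -> Iterator[Tuple[float, float, bool]]:
    """For each valid reading, yield (value, running_average, is_deviation)."""
    parsed = (float(line) for line in raw)        # map: text -> number
    valid = (v for v in parsed if 0 <= v <= 100)  # filter: drop out-of-range readings
    count, total = 0, 0.0
    for value in valid:                           # aggregate: running average
        # Compare against the average of the readings seen before this one.
        is_deviation = count > 0 and abs(value - total / count) > max_deviation * (total / count)
        count += 1
        total += value
        yield value, total / count, is_deviation


print(list(analyze(["10", "12", "500", "11", "20"])))
# [(10.0, 10.0, False), (12.0, 11.0, False), (11.0, 11.0, False), (20.0, 13.25, True)]
```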

Event time and processing time

Flink handles both event time (the time an event actually happened, e.g., when a user clicked a button) and processing time (the time at which Flink handles the event).

This distinction is vital to real-time analytics. For example, a pipeline processing clickstream data from a worldwide audience uses event time so that clicks are analyzed in the order they actually happened, even if some are delayed on the network and arrive late.
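Event time is usually paired with watermarks, which tell the system how far event time has progressed so it can tolerate some lateness. The plain-Python sketch below uses a bounded-out-of-orderness idea (watermark = largest timestamp seen minus an allowed delay), similar in spirit to Flink’s `WatermarkStrategy.forBoundedOutOfOrderness`: it buffers events and releases them in event-time order once the watermark passes them. The 5-second delay, the event format, and the function name are assumptions.

```python
import heapq
from typing import Iterable, Iterator, List, Optional, Tuple


def reorder_by_event_time(events: Iterable[Tuple[int, str]],
                          max_delay: int = 5) -> Iterator[Tuple[int, str]]:
    """Take (event_time, payload) pairs in arrival order and yield them in
    event-time order, assuming no event arrives more than `max_delay` late."""
    buffer: List[Tuple[int, str]] = []  # min-heap ordered by event time
    max_seen: Optional[int] = None
    for event in events:
        heapq.heappush(buffer, event)
        max_seen = event[0] if max_seen is None else max(max_seen, event[0])
        watermark = max_seen - max_delay
        # Events at or before the watermark are treated as complete; emit them in order.
        while buffer and buffer[0][0] <= watermark:
            yield heapq.heappop(buffer)
    while buffer:  # end of input: flush the remaining events
        yield heapq.heappop(buffer)


arrivals = [(1, "a"), (3, "c"), (2, "b"), (9, "e"), (5, "d"), (12, "f")]
print([t for t, _ in reorder_by_event_time(arrivals)])  # [1, 2, 3, 5, 9, 12]
```

An event arriving later than `max_delay` would be emitted out of order in this sketch; Flink instead treats such events as late data, which you can drop, route to a side output, or handle with allowed lateness.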

Windowing in Flink

Real-time processing usually requires splitting data into smaller, more manageable pieces called windows. For instance, when tracking website traffic, you might count how many users visit per minute. Flink supports time windows (including tumbling, sliding, and session windows); if you work with time-series data, they are a foundational tool for aggregating data and analyzing trends.
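Counting visitors per minute is a tumbling-window aggregation: time is cut into fixed, non-overlapping one-minute buckets. The plain-Python sketch below assigns each (timestamp, user) event to its window by rounding the timestamp down and counts distinct users per window. It ignores late data and is not Flink’s windowing API; the `users_per_minute` name and event format are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def users_per_minute(events: Iterable[Tuple[int, str]], window_s: int = 60) -> Dict[int, int]:
    """Map each window's start time (seconds) to the number of distinct users in it."""
    windows: Dict[int, Set[str]] = defaultdict(set)
    for ts, user_id in events:
        window_start = ts - ts % window_s  # e.g. ts=75 falls in the window [60, 120)
        windows[window_start].add(user_id)
    return {start: len(users) for start, users in sorted(windows.items())}


visits = [(5, "a"), (30, "b"), (59, "a"), (60, "c"), (75, "a"), (130, "b")]
print(users_per_minute(visits))  # {0: 2, 60: 2, 120: 1}
```

A sliding window would assign each event to several overlapping windows instead of exactly one, and a session window would close after a gap of inactivity rather than at fixed boundaries.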

A Guide To Event Streaming Analytics With Flink

Now, let’s walk through a typical real-time analytics workflow in Flink:

- Define the problem. For example, suppose you want to analyze user activity logs to spot traffic spikes.

- Data ingestion. Flink can ingest data from a variety of sources, such as Apache Kafka, RabbitMQ, or relational databases. For instance, you can stream user activity logs from Kafka in real time.

- Processing the data. Once the data is ingested, Flink processes it. You might filter out irrelevant logs, group user activity by region, or identify sudden traffic spikes.

- Output the results. Flink can send processed results to dashboards, databases, or alerting systems. For example, a Flink job can notify your team on Slack when it detects a traffic spike.
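The workflow steps can be strung together in a small plain-Python pipeline. Everything here is a stand-in: a list replaces the Kafka source, `print` replaces the Slack notification, and the log format, region names, spike threshold, and function names are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple


@dataclass
class Activity:
    ts: int      # event time in seconds
    region: str
    event: str


def ingest(lines: Iterable[str]) -> Iterator[Activity]:
    """Ingestion: parse 'ts,region,event' lines (stand-in for a Kafka source)."""
    for line in lines:
        ts, region, event = line.strip().split(",")
        yield Activity(int(ts), region, event)


def detect_spikes(records: Iterable[Activity], threshold: int,
                  window_s: int = 60) -> Iterator[str]:
    """Processing: filter, group by (window, region), and flag spikes."""
    counts: Counter = Counter()
    for r in records:
        if r.event == "healthcheck":  # filter out logs we do not care about
            continue
        key: Tuple[int, str] = (r.ts - r.ts % window_s, r.region)
        counts[key] += 1
        if counts[key] == threshold + 1:  # alert once per window and region
            yield f"ALERT: traffic spike in {r.region} (window starting at {key[0]}s)"


def output(alerts: Iterable[str]) -> None:
    """Output: stand-in for an alerting sink such as a Slack notification."""
    for alert in alerts:
        print(alert)


logs = ["1,eu,click", "2,eu,click", "3,us,healthcheck", "4,eu,view", "70,us,click"]
output(detect_spikes(ingest(logs), threshold=2))
# ALERT: traffic spike in eu (window starting at 0s)
```

Because every stage is a generator, each log line flows through ingestion, processing, and output before the next one is read, which loosely mirrors how records move through a streaming job.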

Frequently Encountered Challenges and Their Solutions

Latency issues. Network delays or heavy data volumes can slow down real-time processing. Keep the user experience smooth by tuning window sizes and relying on Flink’s backpressure handling.

Data skew. Uneven data distribution can leave some tasks overloaded while others sit idle. Use Flink’s partitioning capabilities to spread the workload evenly across TaskManagers.

Fault tolerance. Even with Flink’s fault-tolerance mechanisms, failures need careful handling. Enable checkpoints to persist application state, and tune the checkpoint interval to balance performance against reliability.

Scalability challenges. As data volumes grow, scalability becomes critical. Flink scales horizontally with relatively little effort: add TaskManagers to the cluster and increase the job’s parallelism. Use Flink’s metrics and dashboards to inform your scaling decisions.
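Data skew is easy to see in a toy model. The plain-Python sketch below (not Flink’s partitioner) sends events to parallel workers by hashing their key, the way a keyed stream is partitioned, and compares that with round-robin distribution, the idea behind Flink’s `rebalance()`. Round-robin only helps when the operator does not need per-key state; for keyed aggregations, a common workaround is to salt hot keys and aggregate in two stages. The key distribution and function names are made up.

```python
import zlib
from typing import List


def hash_partition(keys: List[str], workers: int) -> List[int]:
    """Per-worker load when each event goes to crc32(key) % workers (same key -> same worker)."""
    loads = [0] * workers
    for key in keys:
        loads[zlib.crc32(key.encode()) % workers] += 1
    return loads


def round_robin(keys: List[str], workers: int) -> List[int]:
    """Per-worker load when events are dealt out in turn, ignoring keys."""
    loads = [0] * workers
    for i, _ in enumerate(keys):
        loads[i % workers] += 1
    return loads


# Hypothetical skewed stream: one hot key accounts for 90% of the events.
keys = ["hot"] * 90 + [f"user-{i}" for i in range(10)]
print(hash_partition(keys, 4))  # one worker receives at least 90 events
print(round_robin(keys, 4))     # [25, 25, 25, 25]
```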

Wrapping Up

Flink is a powerful tool for real-time data analytics. Its ability to process unbounded data streams with minimal latency makes it a great fit for enterprises, and its fault-tolerant design keeps even large-scale distributed applications reliable. Enterprises can integrate Flink into their existing web application architectures to strengthen real-time decision-making across many domains. Flink keeps getting better at processing complex streaming workloads efficiently, which bodes well for data-driven applications going forward.

Want to learn more? Explore Flink’s documentation and online tutorials to develop your own real-time analytics applications.