The announcement of BARD by Sundar Pichai, CEO of Google, has been met with great excitement in the technology industry. Google is one of the leaders in AI research and development, and its latest offering has been eagerly anticipated. BARD represents a significant step forward in search technology, offering users a more accurate and relevant search experience. With its advanced machine learning techniques and ability to understand the context and meaning of search queries, BARD is set to compete with the best in the field of AI search. The integration of BARD into Google’s search engine marks a new era in search technology, one that promises to bring users the answers they are looking for, faster and more accurately than ever before.

Traditionally, search engines have relied on keyword-based algorithms to match user queries to relevant web pages. However, these algorithms have several limitations that can lead to less-than-ideal results. They often struggle to understand the true intent behind a query, resulting in irrelevant or incomplete results. This is where Google’s BARD AI comes in. By using advanced machine learning techniques, this AI is able to analyze and understand the relationships between words in a query and in web pages, making it better at matching queries to relevant results.

In this blog, we will explore the problem with traditional search engines, how BARD works, and the advantages it offers over them. We will also discuss the integration of BARD into Google’s search engine and how users can access it. So, without further ado, let’s dive into the world of BARD and see how it is revolutionizing the way we search the web!

What is BARD?

BARD is a new AI search system developed by Google AI. Its name is not an acronym; “Bidirectional Encoder Representations from Transformers” is the expansion of BERT, an earlier Google language model built on the same transformer techniques discussed below. BARD uses advanced machine learning techniques to analyze and understand the relationships between words in a query and in web pages. This allows it to better match user queries to relevant search results, providing users with a more accurate and relevant search experience.

BARD works by using bidirectional encoder representations to capture the context of words in a query and the web pages being searched. This allows BARD to understand the meaning and intent behind a query, making it better equipped to match queries to relevant results. The system also uses transformers, a type of deep learning model, to analyze and process the relationships between words in a query and in web pages. By combining these two technologies, BARD is able to provide users with a more effective and efficient search experience.

BARD integrates deep learning algorithms, including the LaMDA model, to provide users with a more personalized and efficient search experience. LaMDA, which stands for Language Model for Dialogue Applications, is a language generation model developed by Google.

With the integration of LaMDA, BARD is able to generate human-like responses to a wide range of questions, making it a powerful tool for search engines. LaMDA is trained on a massive corpus of text, allowing it to understand and generate responses to a wide range of questions and topics. This makes it possible for BARD to provide users with more relevant and accurate search results, regardless of the complexity of their search queries.

Alongside its language generation capabilities, BARD includes deep learning algorithms that are specifically designed to improve search results. These algorithms work by analyzing the intent behind each search query and then presenting users with the most relevant results. This allows BARD to provide more accurate, personalized, and efficient search results, making it a valuable tool for anyone who uses Google to search for information online.

What is LaMDA?

LaMDA (Language Model for Dialogue Applications) is a language model introduced by Google AI in 2021. LaMDA is designed to generate coherent and context-aware responses in natural language in various scenarios, including dialogue systems and conversational agents.

LaMDA is based on the transformer architecture, which is a deep learning model that has achieved state-of-the-art performance in various NLP tasks, such as sentiment analysis, machine translation, and question answering. LaMDA is pre-trained on a massive corpus of diverse text from the internet, which includes over 5 million passages of text and covers a broad range of topics, from news articles to fiction stories.

One of the key features of LaMDA is its ability to generate context-aware responses. LaMDA uses its transformer layers to process and understand the context of the input text, and generates a response based on that context. This enables LaMDA to generate responses that are coherent and relevant to the input text, regardless of the topic or genre.

Another feature of LaMDA is its ability to generate responses in multiple modalities. LaMDA can generate text-based responses, but it can also generate responses in the form of images, sound, and even video. This opens up new possibilities for conversational AI applications, such as chatbots that can generate dynamic images and videos to complement their responses.

LaMDA is also highly flexible and can be fine-tuned for specific applications. This means that developers can use LaMDA as a base model and train it on their own data to generate domain-specific responses. For example, a travel company could fine-tune LaMDA on travel-related data to create a chatbot that can answer questions about travel destinations, flights, and hotel reservations.

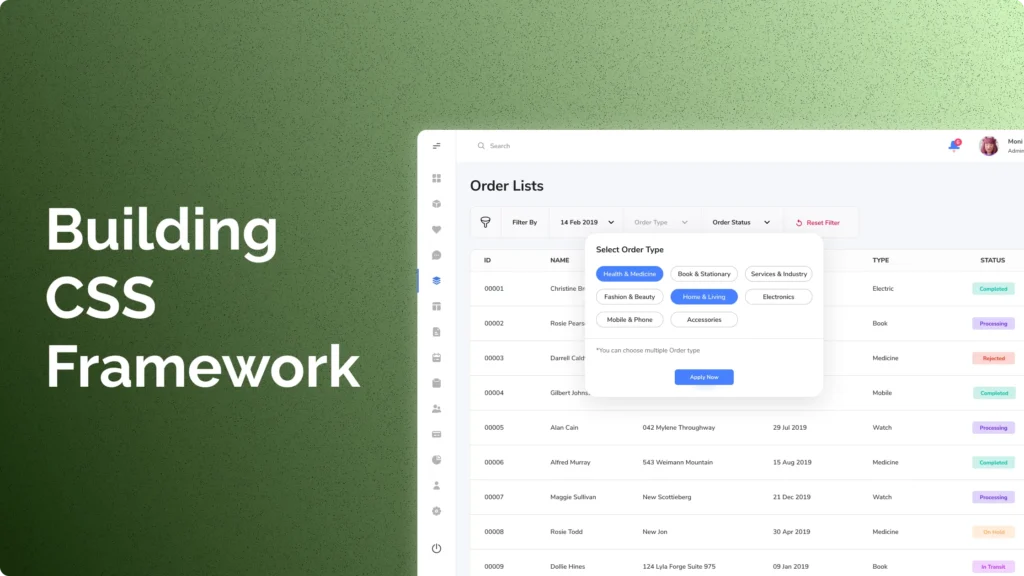

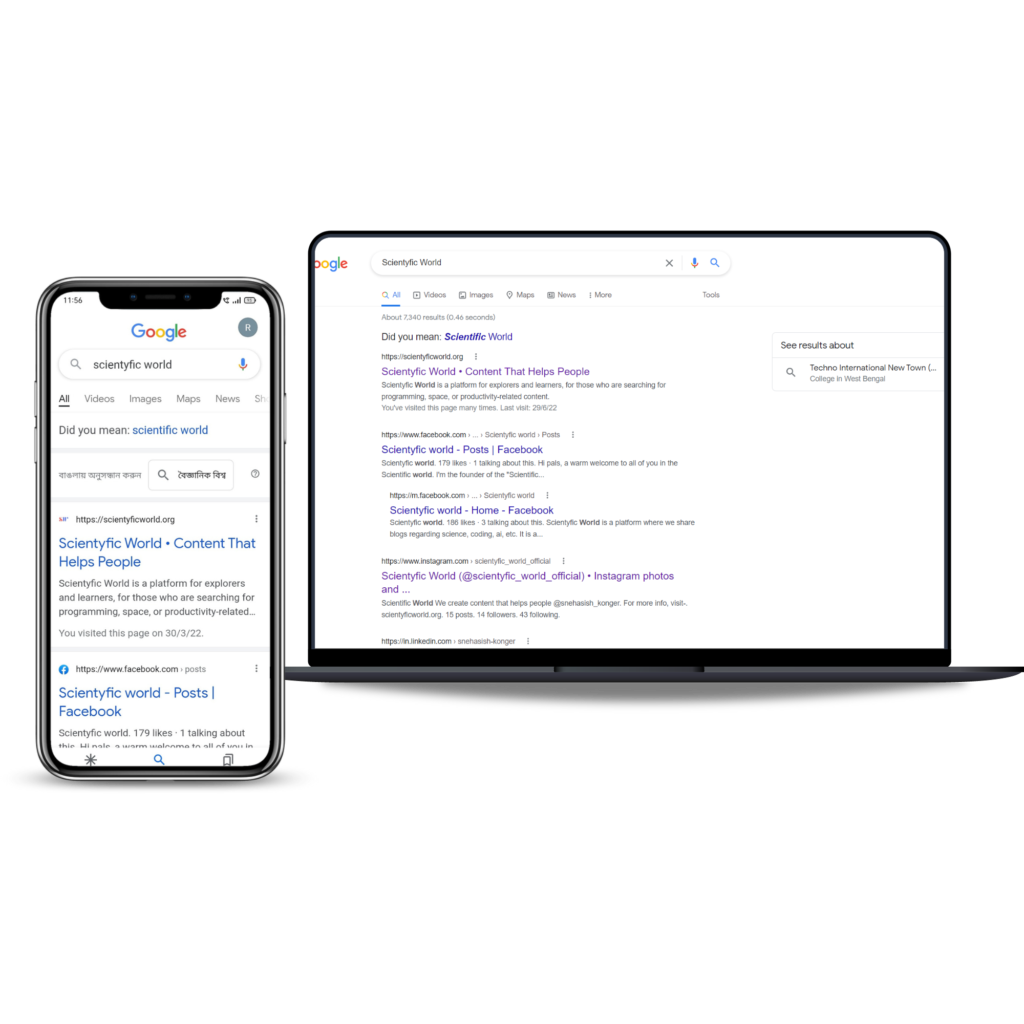

How to get access to BARD?

To get access to BARD, users simply need to use the Google search engine as they normally would. The advanced search capabilities of BARD are integrated seamlessly into the existing Google search engine, meaning that users will not have to navigate a new search system or learn a new interface.

Additionally, BARD’s deep learning algorithms are automatically applied to all search queries, meaning that users will receive the benefits of BARD’s advanced search capabilities without having to take any additional steps.

For users who are interested in learning more about BARD and its capabilities, Google has provided a range of resources, including tutorials, FAQs, and user guides, that are available on its website.

Overall, getting access to BARD is simple and straightforward. By using the Google search engine, users can now enjoy the benefits of a more personalized, efficient, and accurate search experience.

The problem with traditional search engines:

There are two main problems with traditional search engines:

- Limitations of keyword-based search algorithms:

Traditional search engines use keyword-based algorithms to match user queries to relevant web pages. While these algorithms have been effective in the past, they have several limitations that can lead to less-than-ideal results. These algorithms often struggle to understand the true intent behind a query, resulting in irrelevant or incomplete results. For example, if a user searches for “best Italian restaurant,” they may receive results for Italian restaurants that are not necessarily the best.

Additionally, keyword-based algorithms can struggle to understand the relationships between words in a query. For example, if a user searches for “best Italian restaurant near me,” the keyword-based algorithm may only focus on the words “best” and “Italian restaurant,” resulting in irrelevant results.
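The limitation described above can be illustrated with a minimal sketch. The page snippets and the `tokenize` and `keyword_score` helpers are hypothetical and do not reflect how any production search engine ranks pages; the point is only that counting shared words rewards repetition of the query terms rather than a match for the user's intent.

```python
# Toy keyword matcher: a page's score is the number of query words it contains.
# All page text and function names here are illustrative assumptions.

def tokenize(text):
    """Lowercase the text, drop commas and periods, and return the set of words."""
    return set(text.lower().replace(",", " ").replace(".", " ").split())

def keyword_score(query, page):
    """Count the distinct query words that also appear in the page."""
    return len(tokenize(query) & tokenize(page))

pages = {
    "review": "Luigi's is widely rated the best Italian restaurant in town.",
    "spam": "Best Italian restaurant deals, best Italian restaurant coupons near me.",
}

query = "best Italian restaurant near me"
scores = {name: keyword_score(query, text) for name, text in pages.items()}

# The coupon page matches every query word, so it outranks the genuine review.
for name, value in sorted(scores.items(), key=lambda item: -item[1]):
    print(name, value)
```

In this toy setup the coupon page wins simply because it echoes "near me", even though it says nothing about where the user actually is.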

- The need for a more context-aware search engine:

To address the limitations of keyword-based algorithms, there is a growing need for a more context-aware search engine. A context-aware search engine would better understand the meaning and intent behind a query, providing users with more accurate and relevant results. By analyzing the relationships between words in a query and in web pages, a context-aware search engine can provide users with a more effective and efficient search experience.

This is where BARD AI comes in. By using advanced machine learning techniques to understand the context and meaning of search queries, this AI is able to provide users with a more accurate and relevant search experience. In the next section, we will explore how BARD works and the advantages it offers over traditional search engines.

How does BARD work?

BARD uses a combination of bidirectional encoder representations and transformers to analyze and understand the relationships between words in a search query and in web pages. This advanced combination of deep learning techniques allows BARD to better understand the context and meaning behind a query, resulting in more accurate and relevant search results for users. In this section, we will delve into how these technologies work together to bring a new level of context-awareness to search engines.

- Bidirectional Encoder Representations:

BARD uses bidirectional encoder representations to capture the context of words in a query and in web pages. This allows BARD to understand the meaning and intent behind a query, making it better equipped to match queries to relevant results. Bidirectional encoder representations come from a type of deep learning model that analyzes the relationships between words in a sequence. By processing both the forward and backward sequences of words, the model captures the context of each word in a query and in web pages.

- Transformers:

BARD also uses transformers, a type of deep learning model, to analyze and process the relationships between words in a query and in web pages. Transformers have proven highly effective in natural language processing tasks, such as machine translation and text classification. By using transformers, BARD is able to better understand the relationships between words in a query and in web pages, making it possible to provide users with more accurate and relevant search results.

- Combining Bidirectional Encoder Representations and Transformers:

By combining bidirectional encoder representations and transformers, BARD is able to provide users with a more effective and efficient search experience. The bidirectional encoder representations capture the context and meaning of a query, while the transformers analyze and process the relationships between words in a query and in web pages. This combination of technologies allows BARD to better understand the intent behind a query and match it to relevant search results.
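To make the idea of bidirectional context more concrete, here is a minimal sketch of the attention step at the heart of a transformer. It is a simplification under stated assumptions: the two-dimensional word vectors are hand-made rather than learned, there are no query, key, or value projections, and the `attention_weights` helper is a hypothetical name. It only contrasts a word that can look at both its left and right neighbours with one that can look left only.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attention_weights(vectors, position, bidirectional=True):
    """Weights the word at `position` assigns to every word in the sequence."""
    scores = []
    for i, other in enumerate(vectors):
        if not bidirectional and i > position:
            scores.append(float("-inf"))  # hide words to the right
        else:
            scores.append(dot(vectors[position], other))
    return softmax(scores)

# Hand-made vectors (an assumption; real models learn these from data).
tokens = ["best", "italian", "restaurant"]
vectors = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

both_sides = attention_weights(vectors, 1, bidirectional=True)
left_only = attention_weights(vectors, 1, bidirectional=False)

print(dict(zip(tokens, both_sides)))  # "italian" attends to all three words
print(dict(zip(tokens, left_only)))   # "restaurant" receives zero weight
```

With the bidirectional setting, the representation of "italian" can draw on "restaurant" to its right as well as "best" to its left, which is the property the text above attributes to bidirectional encoders.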

How does it work in the backend?

Google Bard is powered by the LaMDA language model. LaMDA is a neural network model that is trained on a massive dataset of text and code. This dataset includes books, articles, code, and other forms of text. LaMDA can understand and generate text in a natural, conversational way.

In addition to LaMDA, Google Bard also uses the Knowledge Graph. The Knowledge Graph is a database of facts and information about the world. This database includes information about people, places, things, and events. Google Bard uses the Knowledge Graph to provide context for your questions and to generate more accurate and informative responses.

Google Bard is also able to answer NORA questions. NORA stands for “No One Right Answer.” These are questions that have multiple possible answers, or subjective answers. For example, the question “What is the best movie of all time?” is a NORA question. Google Bard can generate different responses to NORA questions, depending on the context and the user’s preferences.

The response generation process for Google Bard is complex and involves several steps. First, the LaMDA language model tries to understand your question. It does this by analyzing the words in your question, the context of your question, and the Knowledge Graph. Once LaMDA has a good understanding of your question, it generates a response. This response is then evaluated by several metrics, such as accuracy, informativeness, and fluency. If the response is not good enough, LaMDA will try again.

Here is a more detailed look at each of these steps:

1. Understanding your question

The first step in the response generation process is for LaMDA to understand your question. LaMDA does this by analyzing the words in your question, the context of your question, and the Knowledge Graph.

The words in your question are important because they provide LaMDA with clues about the meaning of your question. For example, if you ask the question “What is the capital of France?”, LaMDA will know that you are asking for the name of the city that is the capital of France.

The context of your question is also important because it can provide LaMDA with additional information about what you are asking. For example, if you ask the question “What is the capital of France?” in a conversation about European countries, LaMDA will know that you are probably asking about the capital of the country of France, not the capital of the continent of Europe.

The Knowledge Graph is a database of facts and information about the world. LaMDA uses the Knowledge Graph to provide context for your questions and to generate more accurate and informative responses. For example, if you ask the question “What is the capital of France?”, LaMDA will use the Knowledge Graph to learn that the capital of France is Paris.
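As a rough illustration of the lookup described above, the sketch below stores a couple of facts in a dictionary and answers one narrow question pattern. The `FACTS` table, the `lookup` helper, and the string matching are all hypothetical; Google's actual Knowledge Graph and the way a language model consults it are far more sophisticated and are not documented in this article.

```python
# Toy knowledge graph: (subject, relation) -> object.
# The structure and helper names are illustrative assumptions.
FACTS = {
    ("France", "capital"): "Paris",
    ("Italy", "capital"): "Rome",
}

def lookup(subject, relation):
    """Return the stored object for a (subject, relation) pair, or None."""
    return FACTS.get((subject, relation))

def answer_capital_question(question):
    """Handle only questions shaped like 'What is the capital of X?'."""
    prefix = "What is the capital of "
    if not question.startswith(prefix):
        return None
    country = question[len(prefix):].rstrip("?").strip()
    return lookup(country, "capital")

print(answer_capital_question("What is the capital of France?"))
```

A question outside the stored facts or the expected pattern simply returns `None`, which is where a generative model would have to fall back on what it learned during training.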

2. Generating a response

Once LaMDA has a good understanding of your question, it will generate a response. The response is generated by a neural network model that is trained on a massive dataset of text and code.

The neural network model takes the following inputs:

- The words in your question

- The context of your question

- The Knowledge Graph

The neural network model then generates a response. The response is a piece of text that is generated in a natural, conversational way.

3. Evaluating the response

The response generated by the neural network model is then evaluated by several metrics, such as accuracy, informativeness, and fluency. If the response is not good enough, LaMDA will try again.

The accuracy of the response is measured by how well it answers your question. The informativeness of the response is measured by how much new information it provides. The fluency of the response is measured by how natural and easy it is to read.

If the response is not accurate, informative, or fluent, LaMDA will try again. LaMDA will continue to try until it generates a response that meets the required standards.
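The generate, evaluate, and retry cycle described in the three steps above can be sketched as a simple loop. Everything here is a stand-in: `toy_generate` returns canned drafts instead of calling a language model, `toy_score` uses crude heuristics for accuracy, informativeness, and fluency, and the threshold and attempt cap are assumptions added so the loop always terminates. It is not a description of how LaMDA is actually implemented.

```python
def generate_until_acceptable(question, generate, score, threshold=0.9, max_attempts=5):
    """Try up to `max_attempts` drafts and stop at the first one scoring >= threshold.

    Returns the best (response, score) pair seen, even if none passed.
    """
    best_response, best_score = None, float("-inf")
    for attempt in range(max_attempts):
        response = generate(question, attempt)
        value = score(question, response)
        if value > best_score:
            best_response, best_score = response, value
        if value >= threshold:
            break
    return best_response, best_score

# Canned drafts standing in for a real model's successive outputs.
DRAFTS = ["I am not sure.", "Paris.", "The capital of France is Paris."]

def toy_generate(question, attempt):
    """Return the next canned draft (hypothetical stand-in for a model call)."""
    return DRAFTS[min(attempt, len(DRAFTS) - 1)]

def toy_score(question, response):
    """Average three crude, hand-written metrics in the range 0 to 1."""
    accuracy = 1.0 if "Paris" in response else 0.0
    informativeness = min(len(response.split()) / 6, 1.0)
    fluency = 1.0 if response.endswith(".") else 0.5
    return (accuracy + informativeness + fluency) / 3

response, value = generate_until_acceptable(
    "What is the capital of France?", toy_generate, toy_score
)
print(response, value)
```

The first two drafts fall below the threshold, so the loop keeps going until the third, fully formed sentence is produced.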

In the next section, we will explore the advantages of BARD over traditional search engines and discuss how it is being integrated into Google’s search engine.

Advantages:

BARD offers several advantages over traditional search engines, making it a more effective and efficient search solution for users. By using advanced deep learning techniques to understand the context and meaning of search queries, BARD is able to provide users with more accurate and relevant results. In this section, we will explore some of the key advantages of this AI over traditional search engines.

A. Improved search accuracy:

Improved search accuracy is a key benefit of integrating BARD into Google’s search engine. BARD’s deep learning algorithms enable it to understand the context and meaning of search queries, allowing Google to match users with the information they need more accurately and effectively. This results in a higher percentage of accurate search results, which means users will be able to find the information they need more quickly and easily.

For example, if a user searches for “best Italian restaurants in NYC”, BARD’s ability to understand the context of the query means that it can provide results that are specific to Italian restaurants in New York City, rather than just any Italian restaurants. This leads to a more relevant and focused search experience for the user, who will be able to find the information they need more easily.

Overall, the integration of BARD into Google’s search engine will result in a more accurate and effective search experience for users, as BARD’s advanced deep learning algorithms enable it to provide users with results that are more closely aligned with their expectations.

B. More relevant results:

BARD also provides users with more relevant results than traditional search engines. Its deep learning algorithms allow it to understand the context and meaning of search queries, enabling it to provide users with results that are more relevant and focused.

For example, if a user searches for “best Italian restaurants in NYC”, its ability to understand the context of the query means that it can provide results that are specific to Italian restaurants in New York City, rather than just any Italian restaurants. This leads to a more relevant and focused search experience for the user, who will be able to find the information they need more easily.

Additionally, its deep learning algorithms enable it to provide users with results that are tailored to their individual needs and preferences. This means that users will receive results that are more closely aligned with their expectations, resulting in a more personalized search experience.

C. Enhanced user experience:

By providing users with more relevant and accurate search results, BARD enhances the user experience by allowing users to find the information they need more easily and quickly.

Additionally, its advanced deep learning algorithms enable it to provide users with a more personalized search experience. This means that users will receive results that are tailored to their individual needs and preferences, resulting in a more enjoyable and efficient search experience.

Moreover, the integration of BARD into Google’s search engine means that users will benefit from the user-friendly interface and intuitive design that Google is known for. Its advanced search capabilities are integrated seamlessly into the existing Google search engine, meaning that users will not have to learn a new interface or navigate a new search system.

Bard vs ChatGPT:

| Criteria | BARD AI | ChatGPT |
|---|---|---|
| Purpose | To provide natural, conversational responses to user queries | To generate human-like text based on given prompts |
| Language Model | LaMDA, a neural network model trained on a massive dataset of text and code, designed for natural language conversation | GPT, a transformer-based neural network model, designed for text generation |
| Dataset | A dataset of books, articles, code, and other forms of text | A large dataset of internet text and other publicly available text |
| Knowledge Graph | Uses a Knowledge Graph to provide context for user queries and generate more accurate and informative responses | Does not rely on a Knowledge Graph |
| NORA questions | Can generate different responses to NORA questions, depending on the context and user’s preferences | Can generate multiple possible responses to NORA questions |
| Response quality | Evaluates responses based on accuracy, informativeness, and fluency, and retries until a satisfactory response is generated | Produces high-quality responses based on the input prompt and the quality of the dataset |
| User interface | Can be integrated with Google Assistant and other Google products to provide conversational responses | Can be integrated with a chatbot or used in other text-based applications |
| Domain-specific | Can be trained and customized for specific domains | Can be trained and customized for specific domains |
| Open source | Not open source | OpenAI provides pre-trained models as well as access to its API for further training |
| Availability | Currently in development and not widely available | Available for use by developers and researchers |

Overall, both BARD AI and ChatGPT are powerful language models that can generate human-like text. However, BARD AI is specifically designed for natural language conversation and can generate more accurate and informative responses by using a Knowledge Graph. In contrast, ChatGPT is designed for text generation and can generate multiple possible responses to NORA questions. Both models can be trained and customized for specific domains, and while ChatGPT is available for use by developers and researchers, BARD AI is currently in development and not widely available.

Integration into Google’s search engine

Google is integrating BARD into its search engine in order to provide users with an improved search experience. Its advanced deep learning techniques will complement Google’s existing search algorithms. In this section, we will discuss how BARD is being integrated into Google’s search engine and how it will enhance the overall search experience for users.

Improved search results:

With the integration of BARD, Google’s search engine is able to understand the context and meaning of search queries, allowing it to provide users with more accurate and relevant results. For example, if a user searches for “best Italian restaurants in NYC”, BARD’s deep learning algorithms allow the search engine to understand that the user is searching for Italian restaurants specifically in New York City, rather than just any Italian restaurant.

BARD’s deep learning algorithms also enable the search engine to provide users with more personalized results that are tailored to their individual needs and preferences. This means that users will receive results that are more closely aligned with their expectations, leading to a more efficient and effective search experience.

Enhanced user experience:

Its integration into Google’s search engine enhances the overall user experience by providing users with a more personalized and efficient search experience. By using advanced deep learning algorithms, BARD is able to understand the context and meaning of search queries, allowing it to provide users with more relevant results that are tailored to their individual needs and preferences.

Additionally, BARD’s integration into Google’s search engine means that users will benefit from the user-friendly interface and intuitive design that Google is known for. The advanced search capabilities of BARD are integrated seamlessly into the existing Google search engine, meaning that users will not have to navigate a new search system or learn a new interface.

This enhanced user experience is also achieved through the ability of BARD to provide users with more accurate search results. By providing users with results that are more closely aligned with their expectations, BARD reduces the amount of time and effort required for users to find the information they need.

Overall, the integration of BARD into Google’s search engine enhances the user experience by providing users with a more personalized, efficient, and accurate search experience. By using advanced deep learning algorithms and integrating its capabilities seamlessly into the existing Google search engine, BARD AI provides users with a more enjoyable and effective search experience.

Continued innovation:

Google is committed to investing in the ongoing development of BARD, in order to ensure that it continues to provide users with the most advanced and effective search experience possible. This includes the development of new algorithms, the integration of new data sources, and the implementation of new technologies.

Additionally, Google plans to continue to collaborate with industry experts and leading researchers in the field of AI in order to drive continued innovation and advancements in BARD and its integration into Google’s search engine.

With ongoing investment and collaboration, BARD will continue to improve the search experience and will drive continued innovation in the field of AI and search technology.

Conclusion

In conclusion, BARD is a revolutionary new search technology that brings a new level of context-awareness to search engines. By using advanced deep learning techniques to understand the context and meaning of search queries, BARD provides users with more accurate and relevant results. The integration of this AI into Google’s search engine represents a major step forward for search technology and will enhance the overall search experience for users. With its ability to provide users with a more comprehensive and effective search experience, this AI is poised to become the new standard in search technology.

References:

- Pichai, S. (2023, February 6). An important next step on our AI journey. Google.

- Special, S. E. T. (2023, February 8). Google introduces ChatGPT competitor Bard: Here’s how to get its access and how does it work. The Economic Times.

- Sharma, D. (2023, February 8). Google brings its ChatGPT rival Bard: here is how to access it. India Today.

- Barik, S. (2023, February 7). Bard vs ChatGPT, and the concerns with both AI chatbots. The Indian Express.